Seeing an API Rate Limit Exceeded error? Here's How to Fix It!

Encountering an "API Rate Limit Exceeded" error can be a significant roadblock in software development, abruptly halting your application's functionality and hindering progress. If you've experienced this frustrating message, you know how disruptive it can be. Rate limits are a standard mechanism employed by API providers to manage traffic, ensure fair usage, and maintain service quality.

We will explore the intricacies of API rate limits, their purpose, the various types you may encounter, and most importantly, the effective strategies to address them. We'll cover methods to identify, handle, and optimize your API interactions, enabling you to build robust applications that gracefully navigate rate limitations.

Understanding API Rate Limits#

Before diving into solutions, let's clarify what API rate limits are. At their core, they are restrictions imposed by API providers on how many requests a client (like your application) can make within a specified time frame. This timeframe can range from seconds to minutes, hours, or even days.

Rate limits are essential for several reasons. They protect the API from excessive or malicious usage that could overload the system and degrade performance for everyone. Rate limits guarantee that all users have equitable access to the API, preventing any single client from monopolizing resources. By controlling the flow of requests, rate limit thresholds help maintain a consistent and predictable level of service for all users.

Rate limits can be implemented in a few distinct ways. One common approach is request-based limits, which restrict the total number of requests allowed within a specific time period, regardless of the client's IP address. Another method is time-based limits, which define the maximum number of requests allowed per second, minute, hour, or any other specified time unit. Finally, IP-based limits restrict the number of requests originating from a particular IP address within a given time frame.

Understanding the specific type of rate limit imposed by an API provider is crucial for effectively managing and handling these limitations. Often, APIs combine different types of rate limits to create a comprehensive system that protects their resources and ensures optimal performance.
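
To make these limit types concrete, here is a minimal sketch of a fixed-window counter, one simple way a provider might enforce "N requests per time window". The class name `FixedWindowLimiter` and its parameters are hypothetical and used only for illustration; real providers often use more elaborate schemes. The `key` can be an API key, a user ID, or an IP address, depending on which kind of limit is being applied.

```typescript
// Hypothetical fixed-window limiter: at most `limit` requests per key per window.
class FixedWindowLimiter {
  private windows = new Map<string, { start: number; count: number }>();
  private limit: number;
  private windowMs: number;

  constructor(limit: number, windowMs: number) {
    this.limit = limit;
    this.windowMs = windowMs;
  }

  // Returns true if the request is allowed, false if it should be rejected.
  allow(key: string, nowMs: number = Date.now()): boolean {
    const current = this.windows.get(key);
    if (!current || nowMs - current.start >= this.windowMs) {
      // First request for this key, or the previous window has expired.
      this.windows.set(key, { start: nowMs, count: 1 });
      return true;
    }
    if (current.count < this.limit) {
      current.count += 1;
      return true;
    }
    return false;
  }
}
```

Keying by IP address gives an IP-based limit, while keying by API key gives a per-consumer limit; the counting logic stays the same.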

Identifying API Rate Limit Issues#

Detecting when you're experiencing API rate limiting is crucial for preventing disruptions in your application. Fortunately, there are clear signs and tools to help you spot these issues promptly.

Recognizing the “API Rate Limit Exceeded” Error#

The most obvious indicator of a rate limit problem is encountering the "API Rate Limit Exceeded" error message. This usually comes with a specific HTTP status code, typically 429 ("Too Many Requests"). However, some APIs might use different codes or custom error messages to communicate the same issue. Always refer to the API provider's documentation to understand their specific error responses.
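
As a rough sketch of how a client might recognize this condition, the helper below checks for a 429 status and reads the standard `Retry-After` header, which carries either a number of seconds or an HTTP date. The function name `rateLimitDelayMs` is hypothetical, and not every API sends this header, so treat it as a starting point rather than a description of any particular provider.

```typescript
// Returns how long to wait (in ms) if the response signals rate limiting,
// or null if the response is not a 429.
function rateLimitDelayMs(
  status: number,
  retryAfter: string | null,
  nowMs: number = Date.now(),
): number | null {
  if (status !== 429) return null;
  // Rate limited, but the server gave no hint about when to retry.
  if (retryAfter === null || retryAfter.trim() === "") return 0;

  // Retry-After may be a delay in seconds...
  const seconds = Number(retryAfter);
  if (Number.isFinite(seconds)) return Math.max(0, seconds * 1000);

  // ...or an HTTP date.
  const retryAt = Date.parse(retryAfter);
  return Number.isNaN(retryAt) ? 0 : Math.max(0, retryAt - nowMs);
}
```

With the Fetch API, this could be called as `rateLimitDelayMs(response.status, response.headers.get("Retry-After"))`.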

Monitoring API Requests to Detect Rate Limit Issues#

While error messages are helpful, monitoring your API requests is key to proactive rate limit management. Detailed logging of your API interactions is an invaluable practice. This log should record timestamps of requests, the response status codes received, and any accompanying error messages. Over time, this creates a historical record that can help identify patterns and trends leading up to rate limit triggers.

Alongside logging, consider using real-time monitoring tools or dashboards. These provide visual representations of request rates, latency, and error occurrences. By observing these metrics, you can quickly spot anomalies or spikes in usage that might indicate you're approaching or exceeding rate limits. Some developers also find value in integrating error tracking services into their application. These services capture and aggregate errors, including rate limit exceptions, helping you pinpoint recurring issues and potential areas for optimization.

By proactively monitoring your API requests, you can detect issues with rate limiting early on and take appropriate action to avoid disruptions to your application's functionality.
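
The logging practice described above can be sketched as a small in-memory log. The names `RequestLog` and `RequestLogEntry` are hypothetical; a real application would more likely write to its existing logging or metrics system.

```typescript
// One logged API interaction: when it happened and how the API responded.
interface RequestLogEntry {
  timestampMs: number;
  status: number;
  error?: string;
}

class RequestLog {
  private entries: RequestLogEntry[] = [];

  record(entry: RequestLogEntry): void {
    this.entries.push(entry);
  }

  // Number of requests sent in the window that ends at `nowMs`.
  requestsInWindow(windowMs: number, nowMs: number): number {
    return this.entries.filter(
      (e) => e.timestampMs > nowMs - windowMs && e.timestampMs <= nowMs,
    ).length;
  }

  // Fraction of all logged requests that were rejected with a 429.
  rateLimitedShare(): number {
    if (this.entries.length === 0) return 0;
    const limited = this.entries.filter((e) => e.status === 429).length;
    return limited / this.entries.length;
  }
}
```

Comparing `requestsInWindow` against the provider's documented limit gives an early warning before 429 responses start to appear.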

Handling API Rate Limits#

When your application hits an API rate limit, swift and effective handling is essential to maintain functionality. Fortunately, several strategies can help you navigate these situations gracefully.

One common approach is to retry the request with exponential backoff. This involves waiting a short interval before retrying the request and gradually increasing the wait time with each subsequent attempt. This strategy is particularly effective because it reduces the load on the API server during peak times, allowing it to recover more quickly. You can leverage the HTTP response status codes returned by the API to fine-tune your retry logic. For example, a 503 ("Service Unavailable") status might warrant a longer backoff than a 429 ("Too Many Requests") status.
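
A minimal sketch of this retry strategy follows. The names `backoffDelayMs`, `withBackoff`, and `AttemptResult` are hypothetical, and the base delay, cap, and the doubling applied to 503 responses are illustrative assumptions rather than recommended values.

```typescript
// Delay before retry number `retry` (0-based): base * 2^retry, capped at capMs.
function backoffDelayMs(
  retry: number,
  baseMs: number = 500,
  capMs: number = 30000,
): number {
  return Math.min(capMs, baseMs * Math.pow(2, retry));
}

// Minimal response shape; a fetch Response can be mapped onto this.
interface AttemptResult<T> {
  status: number;
  body?: T;
}

// Calls `attempt` and retries on 429 or 503, waiting longer each time.
async function withBackoff<T>(
  attempt: () => Promise<AttemptResult<T>>,
  maxRetries: number = 5,
): Promise<AttemptResult<T>> {
  let result = await attempt();
  for (let retry = 0; retry < maxRetries; retry++) {
    if (result.status !== 429 && result.status !== 503) break;
    // Assumption for illustration: wait twice as long after a 503.
    const multiplier = result.status === 503 ? 2 : 1;
    const delay = multiplier * backoffDelayMs(retry);
    await new Promise<void>((resolve) => setTimeout(resolve, delay));
    result = await attempt();
  }
  return result;
}
```

Many implementations also add a small random jitter to each delay so that many clients do not retry at the same instant. If the API returns a `Retry-After` header, honoring it is usually preferable to a computed delay.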

In addition to retry mechanisms, implementing rate limit handling directly in your code is a best practice. This can involve tracking the number of requests made within a specific time frame and adjusting your application's behavior accordingly. For instance, you might temporarily pause requests or implement a queueing system to distribute them more evenly. Consider using libraries or frameworks that provide built-in rate limit handling features, as they can simplify the implementation and ensure consistent behavior across your application.
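
One way to track requests on the client side is a sliding-window throttle that tells the caller how long to wait before sending. The sketch below is illustrative only; the class name `ClientThrottle` and its `reserve` method are hypothetical, and the limit you configure should come from the provider's documentation.

```typescript
// Hypothetical client-side throttle: at most `limit` requests per sliding window.
class ClientThrottle {
  private sent: number[] = [];
  private limit: number;
  private windowMs: number;

  constructor(limit: number, windowMs: number) {
    this.limit = limit;
    this.windowMs = windowMs;
  }

  // Returns 0 if a request may be sent now (and records it),
  // otherwise the number of milliseconds to wait before trying again.
  reserve(nowMs: number = Date.now()): number {
    // Forget requests that have fallen out of the window.
    this.sent = this.sent.filter((t) => nowMs - t < this.windowMs);
    if (this.sent.length < this.limit) {
      this.sent.push(nowMs);
      return 0;
    }
    // The next slot frees up when the oldest request leaves the window.
    const oldest = this.sent[0] ?? nowMs;
    return oldest + this.windowMs - nowMs;
  }
}
```

A queueing system can be built on top of this by holding each pending request until `reserve` returns 0.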

Optimizing API Requests#

Beyond simply handling rate limits, actively optimizing your API requests can significantly reduce the likelihood of encountering them in the first place.

Efficient API Request Design#

One key aspect of optimization is efficient API request design. This involves minimizing the number of requests needed to achieve a specific task. Instead of making multiple individual calls to fetch different pieces of data, consider whether you can consolidate these requests into a single API call. For example, if you need to retrieve information about multiple users, use a batch API endpoint to fetch data for all users in one go.

Another important aspect of request design is reducing the size of the payload for each request. Review the data you're requesting and ensure you're only retrieving what's necessary. Avoid fetching unnecessary fields or details you won't use in your application. By trimming down your payloads, you can reduce the amount of data transferred over the network and improve the overall efficiency of your API interactions.

Caching and Batching API Requests#

Caching and batching are powerful techniques that can further optimize your API usage. Caching involves storing the results of previous API calls and reusing them when the same data is needed again. This avoids redundant requests and significantly reduces the load on the API server. Consider implementing a caching layer in your application to store frequently accessed data for a specified duration to avoid making unnecessary requests.
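
A caching layer of this kind can be sketched as a small in-memory cache with a time-to-live. The class name `TtlCache` is hypothetical, and the right TTL depends on how quickly the underlying data changes.

```typescript
// Hypothetical in-memory cache whose entries expire after `ttlMs` milliseconds.
class TtlCache<V> {
  private store = new Map<string, { value: V; expiresAt: number }>();
  private ttlMs: number;

  constructor(ttlMs: number) {
    this.ttlMs = ttlMs;
  }

  // Returns the cached value, or undefined if it is missing or expired.
  get(key: string, nowMs: number = Date.now()): V | undefined {
    const hit = this.store.get(key);
    if (!hit) return undefined;
    if (nowMs >= hit.expiresAt) {
      this.store.delete(key);
      return undefined;
    }
    return hit.value;
  }

  set(key: string, value: V, nowMs: number = Date.now()): void {
    this.store.set(key, { value, expiresAt: nowMs + this.ttlMs });
  }
}
```

Before calling the API, check the cache with the request URL (or another stable key); only on a miss do you make the request and store the result.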

Batching allows you to combine multiple requests into a single call. This is particularly useful when you need to perform similar operations on multiple resources. By batching your requests, you can reduce the number of round trips to the API server and improve the overall efficiency of your application.
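
On the client side, batching often starts with splitting a list of IDs into groups that fit the batch endpoint's maximum size. The helper below is a generic sketch; the name `chunk` is hypothetical, and whether a batch endpoint exists, and what its size limit is, depends entirely on the API you are calling.

```typescript
// Splits `items` into consecutive batches of at most `size` elements.
function chunk<T>(items: T[], size: number): T[][] {
  if (size < 1) throw new Error("size must be at least 1");
  const batches: T[][] = [];
  for (let i = 0; i < items.length; i += size) {
    batches.push(items.slice(i, i + size));
  }
  return batches;
}
```

Fetching 250 users with a batch size of 100 then takes three requests instead of 250.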

API Request Strategies#

Beyond handling rate limits reactively, let's explore proactive strategies that can further optimize your API usage and reduce the chances of hitting those limits.

Using API keys and Authentication to Increase Rate Limits#

Many API providers offer tiered rate limits based on authentication and API key usage. By authenticating your requests, you often gain access to a higher rate limit than unauthenticated or anonymous users. This is because authentication helps providers identify and track your usage more accurately, allowing them to offer increased allowances based on your specific needs and usage patterns.

Leveraging API Provider-Specific Features#

Some API providers offer additional features designed to help you manage rate limits more effectively. One such feature is rate limit exemptions, which may be granted for specific use cases or high-priority applications. Another is burst limits, which allow you to temporarily exceed your normal rate limit for short durations to accommodate unexpected spikes in traffic. Investigating and utilizing these provider-specific features can provide valuable flexibility and help you avoid unnecessary rate limit errors.
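
Burst allowances are often modeled with a token bucket: the bucket's capacity sets how large a burst can be, and the refill rate sets the sustained request rate. The sketch below illustrates that general idea only; the class name `TokenBucket` is hypothetical, and it is not a description of how any specific provider implements burst limits.

```typescript
// Hypothetical token bucket: bursts up to `capacity`, refilled at `refillPerSecond`.
class TokenBucket {
  private tokens: number;
  private lastRefillMs: number;
  private capacity: number;
  private refillPerSecond: number;

  constructor(capacity: number, refillPerSecond: number, nowMs: number = Date.now()) {
    this.capacity = capacity;
    this.refillPerSecond = refillPerSecond;
    this.tokens = capacity; // start full so an initial burst is allowed
    this.lastRefillMs = nowMs;
  }

  // Takes one token if available; returns false when the bucket is empty.
  tryRemove(nowMs: number = Date.now()): boolean {
    const elapsedSeconds = Math.max(0, nowMs - this.lastRefillMs) / 1000;
    this.tokens = Math.min(
      this.capacity,
      this.tokens + elapsedSeconds * this.refillPerSecond,
    );
    this.lastRefillMs = nowMs;
    if (this.tokens >= 1) {
      this.tokens -= 1;
      return true;
    }
    return false;
  }
}
```

With a capacity of 3 and a refill rate of 1 token per second, a client can send 3 requests at once and then roughly 1 request per second afterwards.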

Avoiding API Rate Limit Issues#

While handling rate limit errors is important, adopting a proactive approach to avoid them altogether is even better. Let's delve into some best practices for designing your API integration in a way that minimizes the risk of hitting those limits.

Designing for Scalability and Reliability#

When building your application, it's crucial to consider scalability and reliability. This means anticipating potential increases in API usage as your user base grows or your application's functionality expands. Design your architecture to accommodate higher request volumes by implementing load balancing, caching mechanisms, and efficient data retrieval strategies. By planning for scalability, you can ensure that your application remains responsive and avoids being rate limited even under heavy loads.

Continuously Monitoring and Optimizing API Performance#

Rate limits can change over time, and your application's usage patterns may as well. Continuous monitoring and optimization of your API performance are essential. Regularly review your API request logs, error reports, and performance metrics to identify potential bottlenecks or areas for improvement. Fine-tune your caching strategies, adjust request frequencies, and explore alternative API endpoints or providers if necessary. By staying vigilant and proactive, you can maintain a smooth and seamless API integration experience for your users.

Best Practices for API Integration#

Integrating with APIs effectively involves more than just handling rate limits. Here are some additional best practices to ensure a seamless experience:

APIs, like any software, can encounter errors or become temporarily unavailable. Implement robust error-handling mechanisms in your application to gracefully manage these situations. This includes retrying failed requests with appropriate back-off strategies, providing informative error messages to users, and logging errors for analysis and debugging.

API providers often release new versions of their APIs with updated features or changes to existing endpoints. Ensure your application is compatible with the latest API version to avoid unexpected errors or functionality changes. If you're using an older API version, consider migrating to the newer version to take advantage of improvements and ensure continued compatibility.

Rate Limiting With Zuplo#

At Zuplo, Rate Limiting is one of our most popular policies. Zuplo offers a programmable approach to rate limiting that allows you to vary how rate limiting is applied for each customer or request. Implementing truly distributed, high-performance Rate Limiting is difficult; our promise is that using Zuplo is cheaper and faster than doing this yourself. Here's precisely how to do it:

Add a rate-limiting policy#

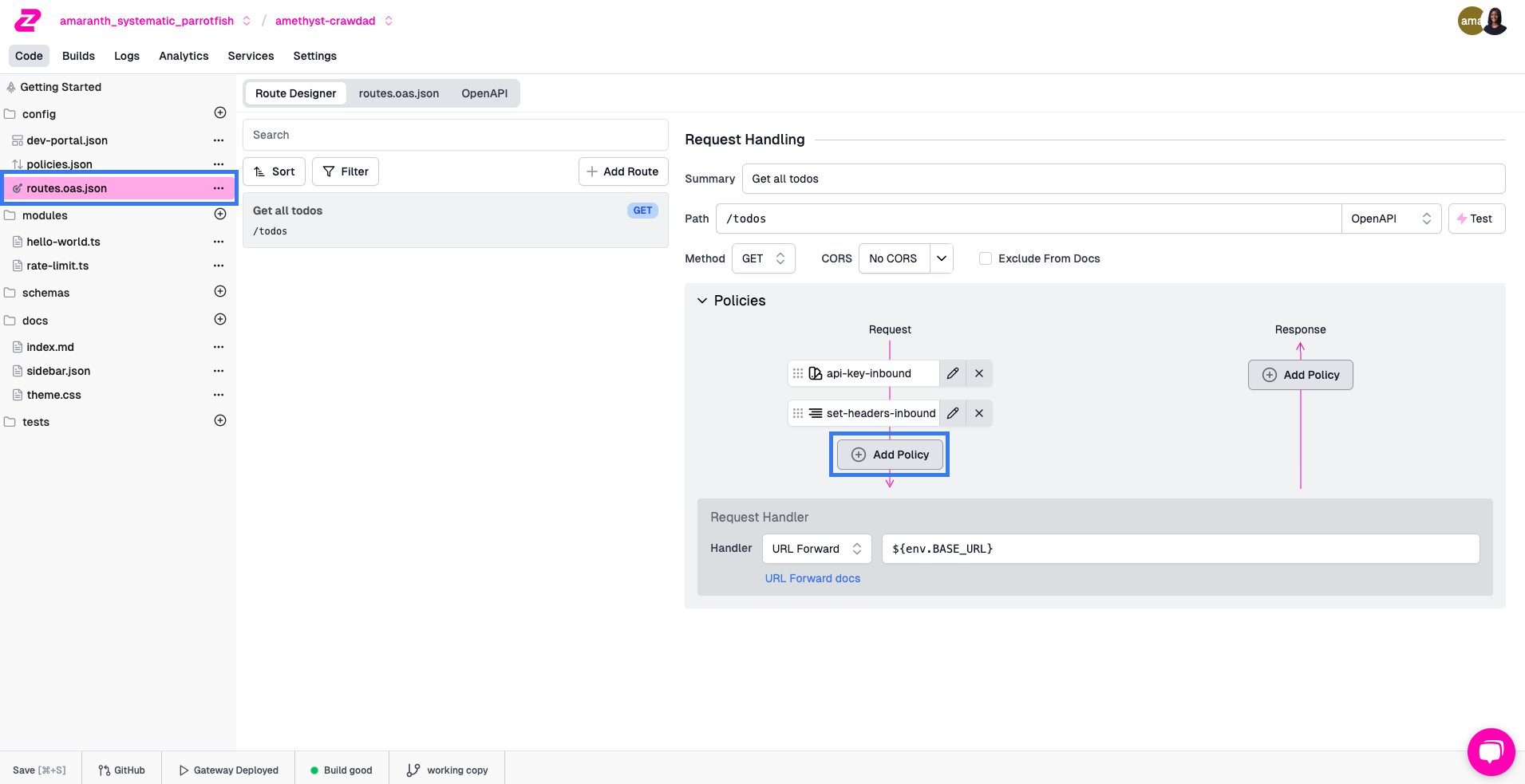

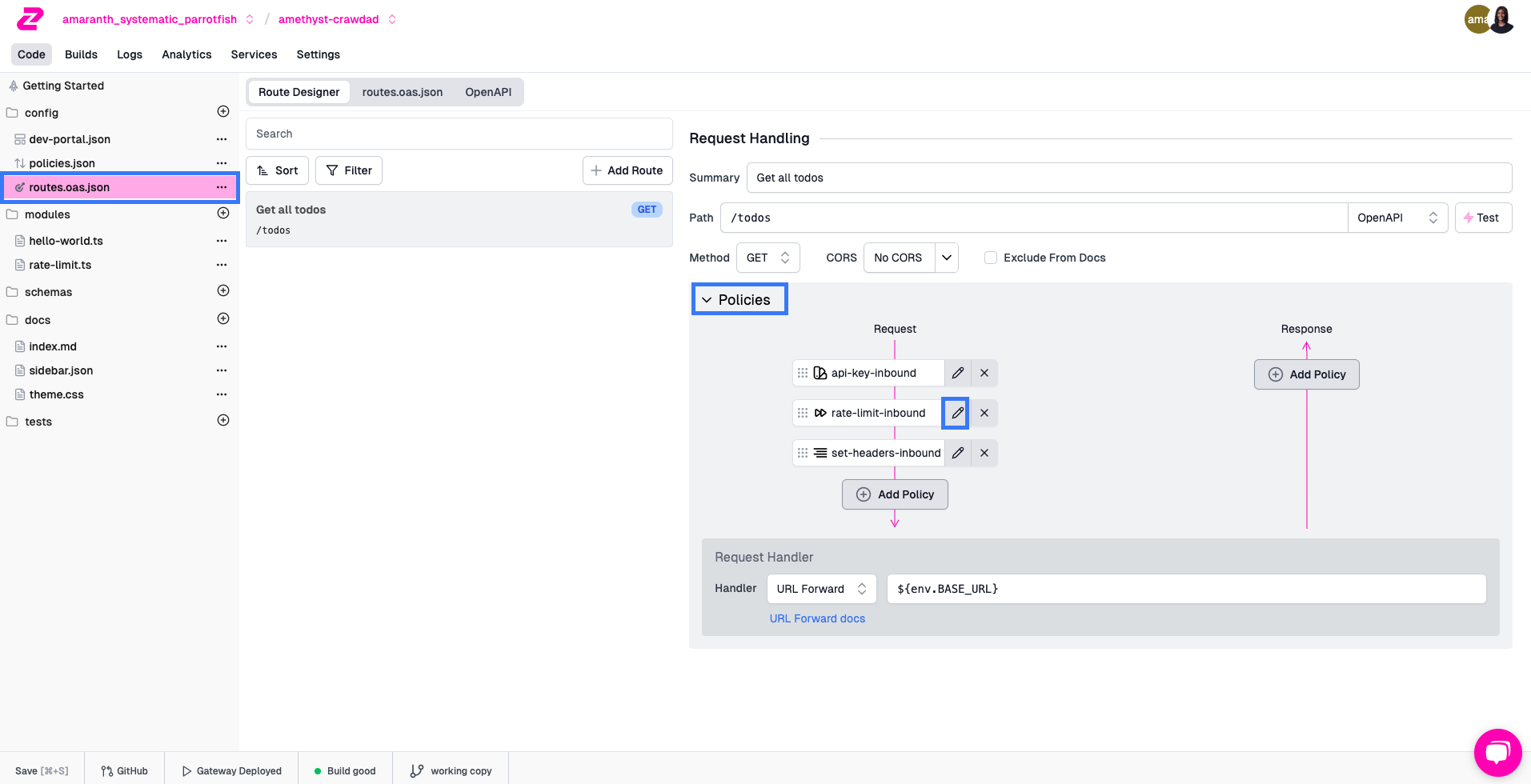

Navigate to your route in the Route Designer and click Add Policy on the request pipeline.

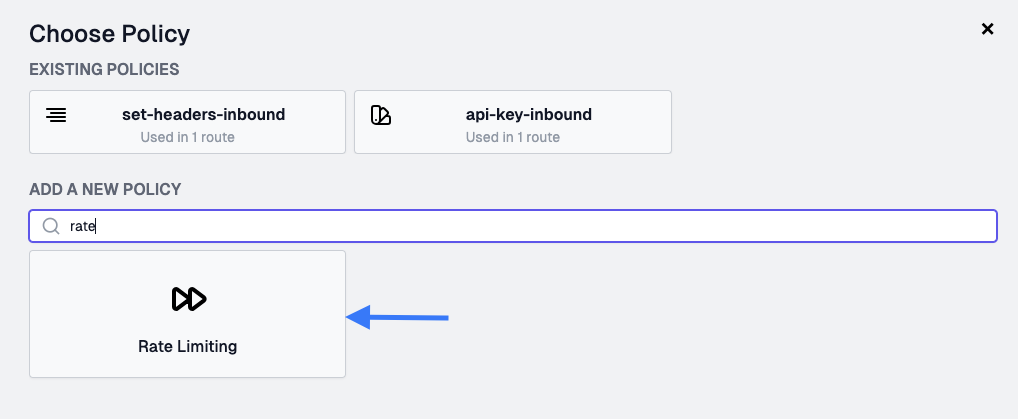

In the Choose Policy modal, search for the Rate Limiting policy.

If you're using API Key Authentication, you can set the policy to rateLimitBy user and allow 1 request every 1 minute.

```json
{
  "export": "RateLimitInboundPolicy",
  "module": "$import(@zuplo/runtime)",
  "options": {
    "rateLimitBy": "user",
    "requestsAllowed": 1,
    "timeWindowMinutes": 1
  }
}
```

Now each consumer will get a separate bucket for rate limiting. At this point, any user that exceeds this rate limit will receive a 429 Too Many Requests.

Try dynamic rate-limiting#

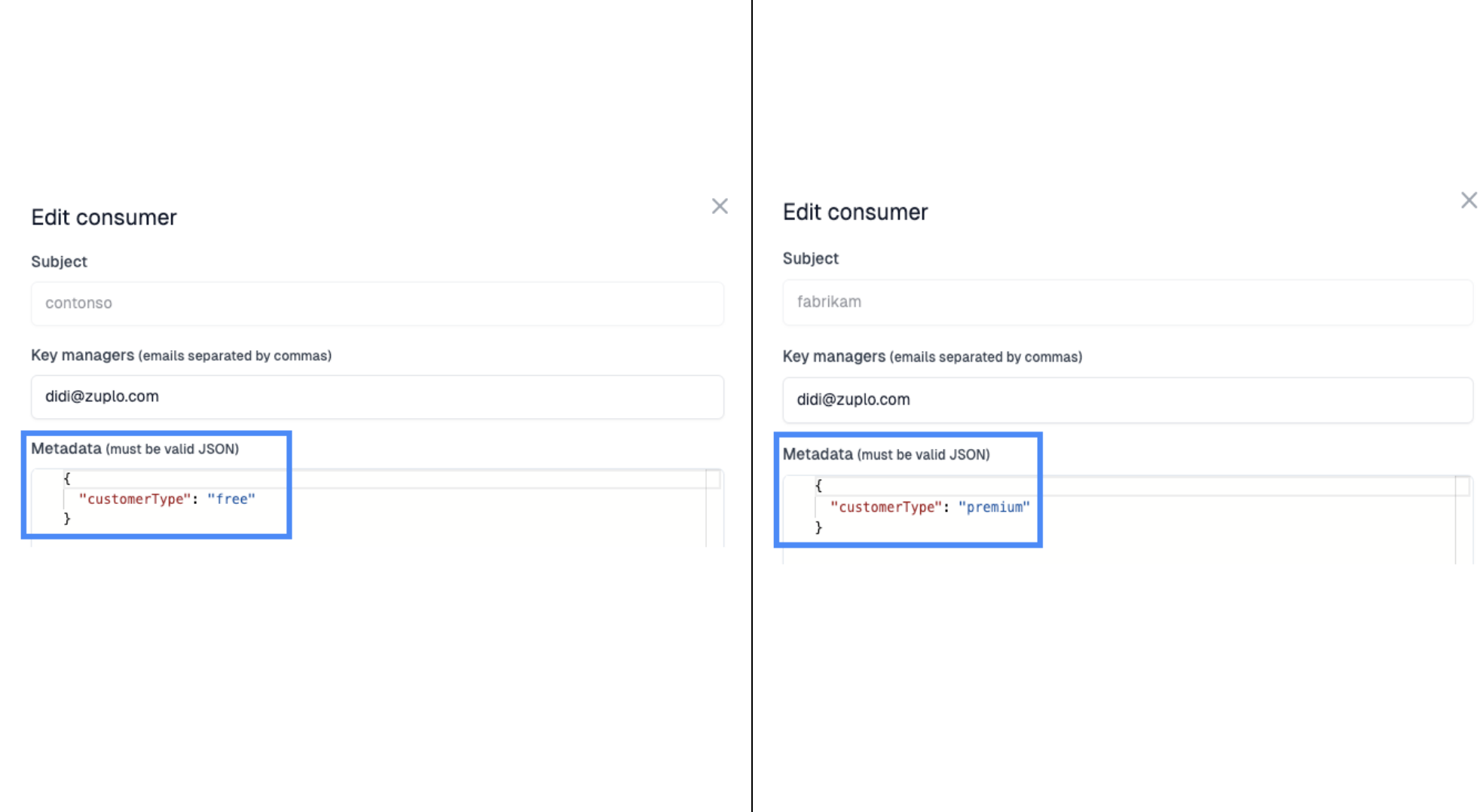

This time, we will make the rate-limiting policy more dynamic, based on properties of the customer. Update the metadata of your two API Key consumers to have a property customerType. Set one to free and another to premium.

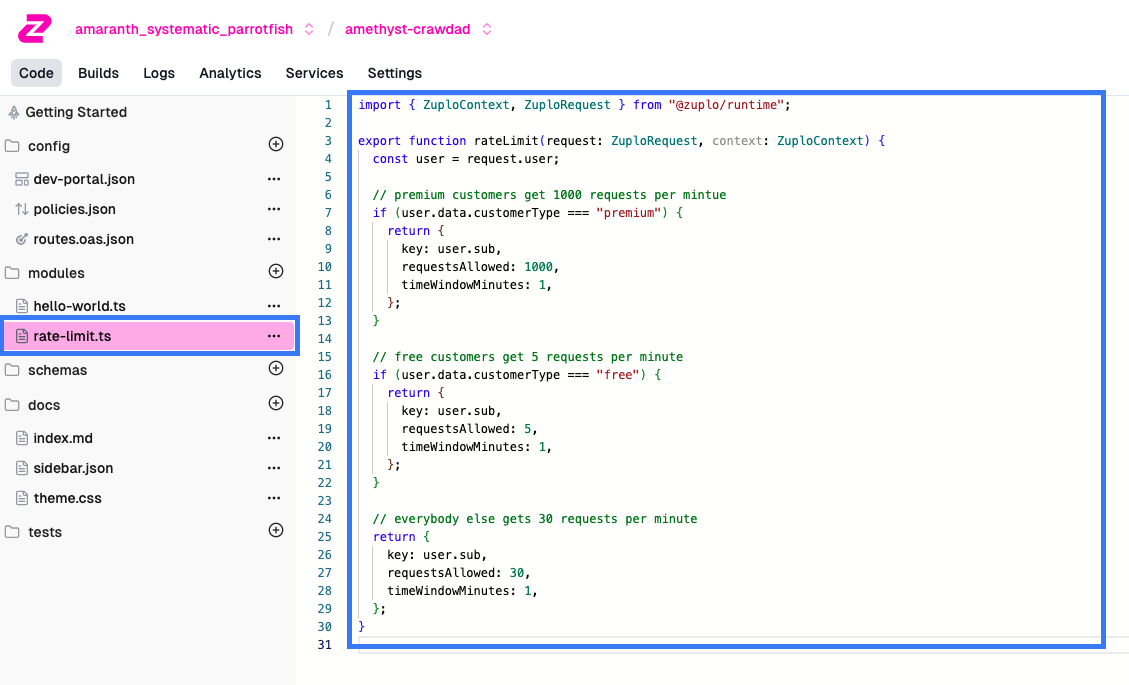

Now add a new module to the files section by clicking the + next to the Modules folder and choosing new empty module. Name this new module rate-limit.ts.

Add the following code to the module:

```typescript
import { ZuploContext, ZuploRequest } from "@zuplo/runtime";

export function rateLimit(request: ZuploRequest, context: ZuploContext) {
  const user = request.user;

  // premium customers get 1000 requests per minute
  if (user.data.customerType === "premium") {
    return {
      key: user.sub,
      requestsAllowed: 1000,
      timeWindowMinutes: 1,
    };
  }

  // free customers get 5 requests per minute
  if (user.data.customerType === "free") {
    return {
      key: user.sub,
      requestsAllowed: 5,
      timeWindowMinutes: 1,
    };
  }

  // everybody else gets 30 requests per minute
  return {
    key: user.sub,
    requestsAllowed: 30,
    timeWindowMinutes: 1,
  };
}
```

Now, we'll reconfigure the rate-limit policy to wire up our custom function. Find the policy in the Route Designer and click edit.

Update the configuration with the following config pointing at our custom rate limit function:

```json
{
  "export": "RateLimitInboundPolicy",
  "module": "$import(@zuplo/runtime)",
  "options": {
    "rateLimitBy": "function",
    "requestsAllowed": 2,
    "timeWindowMinutes": 1,
    "identifier": {
      "export": "rateLimit",
      "module": "$import(./modules/rate-limit)"
    }
  }
}
```

This identifies our rate-limit module and the function rateLimit that it exports. At this point, our dynamic rate limit policy is up and ready to use!

Conclusion#

Navigating the complexities of API rate limits is an unavoidable challenge in modern software development. However, with a solid understanding of their purpose, types, and effective management strategies, you can build applications that gracefully handle these limitations.

By proactively monitoring API usage, implementing retry mechanisms, optimizing request design, and leveraging provider-specific features, you can minimize the impact of rate limits on your application's functionality and user experience.

With tools like Zuplo, implementing robust rate-limiting strategies becomes more accessible. Zuplo's flexible and customizable rate-limiting features empower you to define and enforce rate limits tailored to your specific needs, providing an extra layer of protection for your backend services. Want to enforce API rate limits on your APIs? Sign up for Zuplo to implement rate limiting in minutes across all your APIs.