Building a PostgREST API For Your MySQL Database

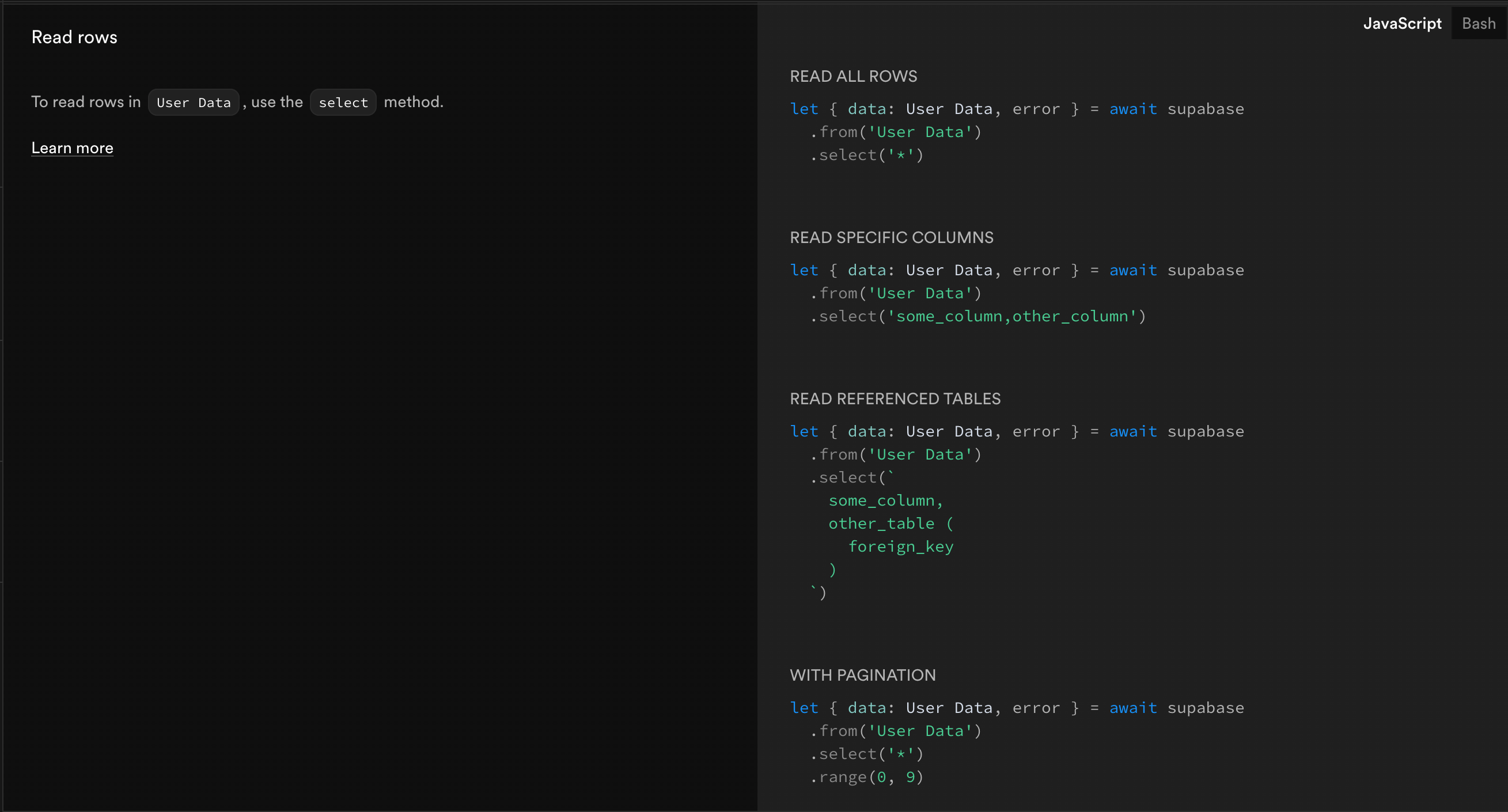

Supabase is great for quickly spinning up a Postgres DB and building a POC. It's

truly a product that has mapped out the entire developer workflow, really honing

in on how devs are using the product and what features they can build to

accommodate. One of my favorite features of Supabase is that they offer a

REST API and

Javascript SDK

for interacting with your database, making it easy to quickly start querying

data from your application. In fact, I've used extensively it for

many samples.

Unfortunately, not all of us can use Supabase or even Postgres at work. Acccording to this database popularity ranking - many of you are using MySQL, and have never experienced what I am talking about. I decided to challenge myself to try and recreate the Supabase experience for MySQL. If you'd like to skip to the final result, check out the repository.

Table of Contents#

- Choosing a DB Platform

- Using PostgREST to Build a Postgres API

- Constructing PostgREST Queries using postgrest-js

- Hosting Your MySQL API

- Creating a PostgREST-Compatible API with NextJS

- Making Queries

- (Optional) API Gateway

- Wrapping-Up

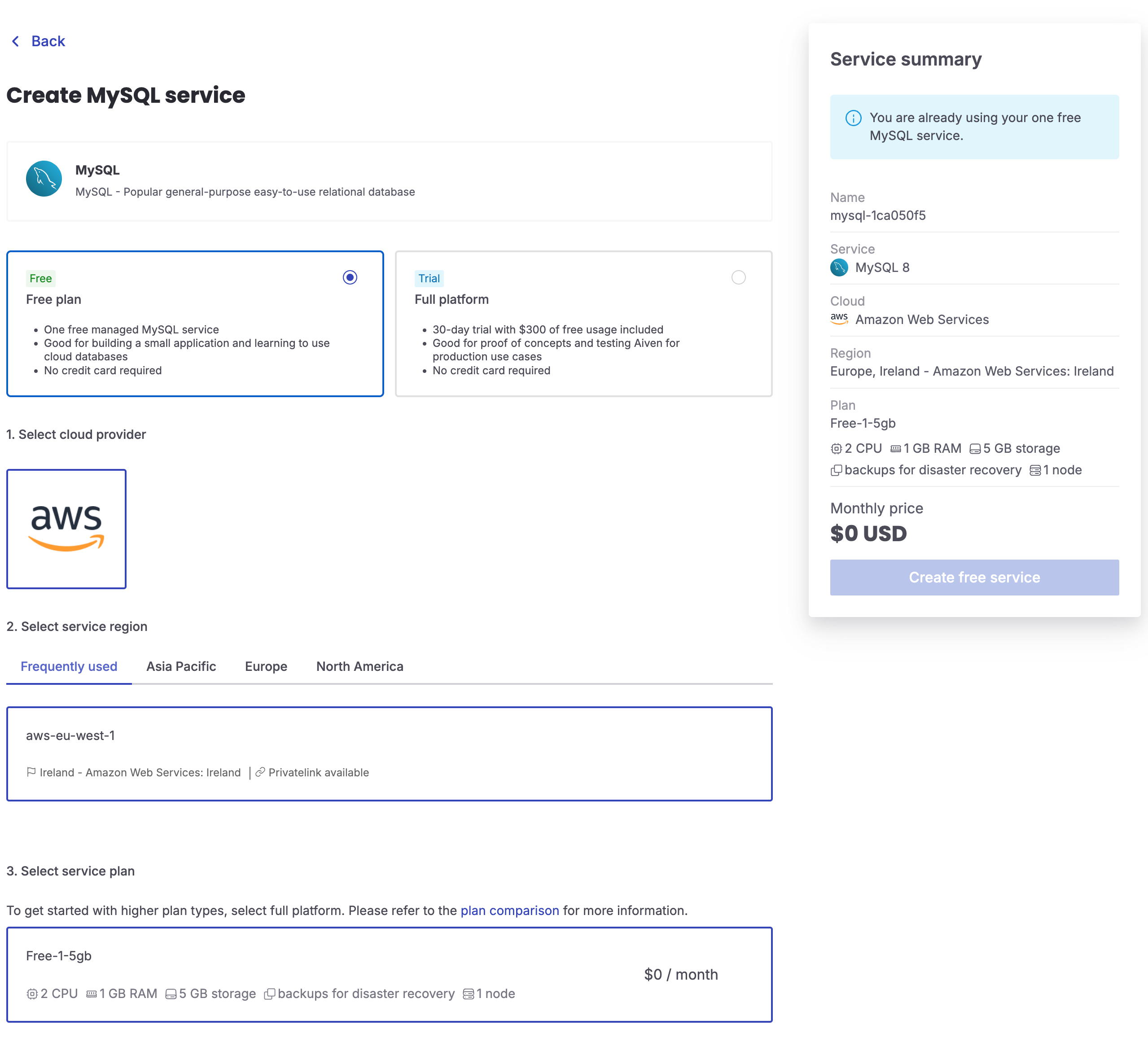

Choosing a DB Platform#

You likely already have MySQL running somewhere on the cloud, but in case you don't - I'd recommend using a platform with a free tier. For production workloads where you're cost sensitive, AWS MySQL is also an option - but I don't want to deal with setting that up. For this tutorial, I am going to use Aiven. Creating a project is fairly straightforward.

Your database will actually be hosted on AWS using Aiven's free tier, which is handy to gauge production latency.

Using PostgREST to Build a Postgres API#

If you read through Supabase's REST API documentation, you'll find that the backend is actually built using a project called PostgREST. PostgREST is a web server that creates a REST API over any Postgres database. It has a specific query structure for translating query parameters into Postgres queries. I'll get into how we will handle these queries in MySQL later.

GET /rest/v1/books?select=title,description&description=ilike.*cheese*&order=title.desc&limit=5&offset=10 HTTP/1.1

Host: localhost:54321Supabase offers a playground for learning the syntax if you'd like to see it in action. You might find constructing these queries difficult on your client.

Constructing PostgREST Queries using postgrest-js#

Thankfully, Supabase already provided a PostgREST client library for constructing queries from your front-end. It seems to have the exact same syntax as the Supabase JS library - which helps us immensely in our mission to recreate their devx.

import { PostgrestClient } from "@supabase/postgrest-js";

const REST_URL = "http://localhost:3000";

const postgrest = new PostgrestClient(REST_URL);

const { data, error } = await postgrest.from("countries").select();

Over 10,000 developers trust Zuplo to secure, document, and monetize their APIs

Learn MoreHosting Your MySQL API#

Alright, so now we have a database, PostgREST server library, and a query client. If we were using a Postgres database, you could simple go to Digital Ocean, deploy the server and frontend to droplets. Unfortunately, we can't use PostgREST directly here as MySQL and Postgresql syntaxes are not entirely compatible. Instead, we have to host our own API that will translate PostgREST syntax to MySQL queries. Luckily, our friends over at Subzero.cloud created a library that does just that.

I am going to challenge myself to build this API using tools a typical web hobbyist (ie. the typical Supabase dev) would know - namely Typescript + NodeJS, Vercel for hosting, and NextJS as my client and server framework. This approach will be fully serverless, so you won't need to manage any infrastructure besides your database.

Creating a PostgREST-Compatible API with NextJS#

Going off of Subzero's example - we will just need a handful of dependencies

npm install @subzerocloud/rest @supabase/postgrest-js copy-webpack-plugin mariadb dotenv@subzerocloud/restis the lib that will translate the PostgREST queries into MySQL@supabase/postgrest-jsas mentioned abovemariadbas the driver for accessing my MySQL databasedotenvfor environment variables to configure the DB connectioncopy-webpack-pluginto fix a bug in NextJS handling wasm files (see thenext.config.tsin the sample repo)

API Boilerplate#

In your NextJS repo, create a file called route.ts under

src/app/api/[...query].

Once you've created the file, the first step is to add some boilerplate for handling the various REST verbs.

import { SubzeroError } from "@subzerocloud/rest";

async function handler(request: Request, method: Method)

if (!["GET", "POST", "PUT", "DELETE", "PATCH"].includes(method)) {

throw new SubzeroError(`Method ${method} not allowed`, 400);

}

}

async function handlerWrapper(request: Request, method: Method) {

try {

return await handler(request, method);

} catch (e) {

if (e instanceof SubzeroError) {

console.log("SubzeroError:", e);

return new Response(e.toJSONString(), {

status: e.status || 500,

headers: { "content-type": "application/json" },

});

} else {

console.log("Error:", e);

return new Response((e as Error).toString(), { status: 500 });

}

}

}

export const GET = async (request: Request) =>

await handlerWrapper(request, "GET");

export const PUT = async (request: Request) =>

await handlerWrapper(request, "PUT");

export const POST = async (request: Request) =>

await handlerWrapper(request, "POST");

export const PATCH = async (request: Request) =>

await handlerWrapper(request, "PATCH");

export const DELETE = async (request: Request) =>

await handlerWrapper(request, "DELETE");

export async function OPTIONS() {

return new Response(null, {

status: 204,

headers: {

"access-control-allow-origin": "*",

"access-control-allow-methods": "GET, POST, PUT, DELETE, PATCH",

"access-control-allow-headers": "Content-Type, Prefer",

},

});

}The code above will handle any calls to /api/{table_name}.

Initializing Subzero#

Now lets get a database connection going. First - we will need to manage our

permissions/access to the database. At the same level as your route, create a

permissions.json file

[

{

"table_schema": "<YOUR_DB_NAME>",

"table_name": "<YOUR_DB_NAME>",

"role": "admin",

"grant": ["all"],

"using": [{ "sql": "true" }]

}

]We are creating an admin role here that will have access to the DB. Now let's

go back to route.ts.

import Subzero, {

SubzeroError,

Env as QueryEnv,

Method,

getIntrospectionQuery,

fmtMySqlEnv,

} from "@subzerocloud/rest";

import mysql, { PoolConfig } from "mariadb";

import { resolve } from "path";

import { readFileSync, existsSync } from "fs";

const urlPrefix = "/api";

const schema = process.env.DATABASE_NAME!;

const dbType = "mysql";

export const dynamic = "force-dynamic";

let subzero: Subzero;

const role = "admin"; // Set according to permissions.json

const connectionParams: PoolConfig = {

connectionLimit: 75,

connectTimeout: 5 * 1000,

insertIdAsNumber: true,

bigIntAsNumber: true,

port: parseInt(process.env.DATABASE_PORT!),

host: process.env.DATABASE_HOST,

user: process.env.DATABASE_USERNAME,

password: process.env.DATABASE_PASSWORD,

allowPublicKeyRetrieval: true,

trace: true,

database: schema,

ssl: {

rejectUnauthorized: true,

ca: process.env.DATABASE_CA_CERTIFICATE,

},

};

// WARNING! do not use this connection pool in other routes since the

// connections hold special user defined variables that might interfere with

// other queries

const subzeroDbPool = mysql.createPool(connectionParams);

async function introspectDatabaseSchema() {

const permissionsFile = resolve(

process.cwd(),

"src/app/api/[...query]/permissions.json",

);

// eslint-disable-next-line @typescript-eslint/no-explicit-any

let permissions: any[] = [];

if (existsSync(permissionsFile)) {

permissions = JSON.parse(readFileSync(permissionsFile, "utf8"));

} else {

console.error("permissions file not found", permissionsFile);

}

const { query, parameters } = getIntrospectionQuery(

dbType,

[schema], // the schema/database that is exposed to the HTTP api

// the introspection query has two 'placeholders' to adapt to different configurations

new Map([

["relations.json", []], // custom relations - empty for now

["permissions.json", permissions],

]),

);

const db = await mysql.createConnection(connectionParams);

const result = await db.query(query, parameters);

const dbSchema = result[0].json_schema;

await db.end();

return dbSchema;

}

// Initialize the subzero instance that parses and formats queries

let initializing = false;

async function initSubzero() {

if (initializing) {

return;

}

initializing = true;

let wait = 0.5;

let retries = 0;

const maxRetries = 3; // You can adjust this or make it configurable

while (!subzero) {

try {

const dbSchema = await introspectDatabaseSchema();

subzero = new Subzero(dbType, dbSchema);

} catch (e) {

const message = e instanceof Error ? e.message : e;

retries++;

if (maxRetries > 0 && retries > maxRetries) {

throw e;

}

wait = Math.min(10, wait * 2); // Max 10 seconds between retries

console.error(

`Failed to connect to database (${message}), retrying in ${wait} seconds...`,

);

await new Promise((resolve) => setTimeout(resolve, wait * 1000));

}

}

initializing = false;

}

async function handler(request: Request, method: Method) {

...

// initialize the subzero instance if it is not initialized yet

if (!subzero) {

await initSubzero();

}

}This code essentially creates a connection to your database for Subzero to

"introspect" it, to understand the schema (ex. columns, types, etc.). Access is

governed by the permissions.json we created earlier.

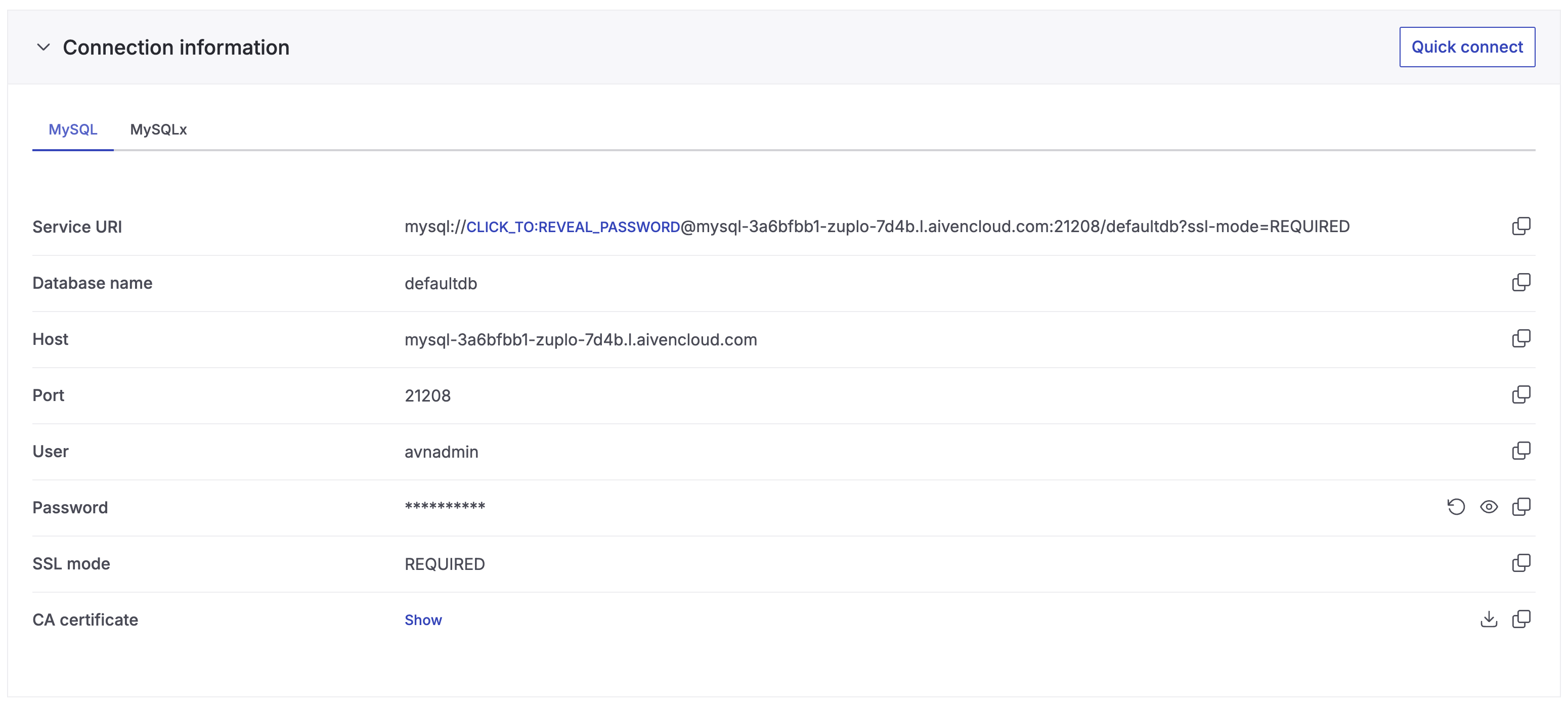

Setting Environment Variables#

Now is a good time for you to create a .env file and start filling in the

variables in the code above. If you are using Aiven, you can find them all on

your service's homepage:

Executing the Query#

Let's finish up the handler function with the code below

async function handler(request: Request, method: Method) {

...

const queryEnv: QueryEnv = [

["role", role],

["request.method", method],

["request.headers", JSON.stringify(request.headers)],

[

"request.get",

JSON.stringify(Object.fromEntries(new URL(request.url).searchParams)),

],

["request.jwt.claims", JSON.stringify({})],

];

const { query: envQuery, parameters: envParameters } = fmtMySqlEnv(queryEnv);

const db = await subzeroDbPool.getConnection();

// eslint-disable-next-line @typescript-eslint/no-explicit-any

let result: any;

try {

await db.query("BEGIN");

await db.query(envQuery, envParameters);

if (method === "GET") {

const { query, parameters } = await subzero.fmtStatement(

schema,

`${urlPrefix}/`,

role,

request,

queryEnv

);

const rows = await db.query(query, parameters);

result = rows[0].body ? JSON.parse(rows[0].body) : null;

} else {

const statement = await subzero.fmtTwoStepStatement(

schema,

`${urlPrefix}/`,

role,

request,

queryEnv

);

const { query: mutate_query, parameters: mutate_parameters } =

statement.fmtMutateStatement();

const rows = await db.query(mutate_query, mutate_parameters);

const { insertId, affectedRows } = rows;

if (insertId > 0 && affectedRows > 0) {

const ids = Array.from(

{ length: affectedRows },

(_, i) => insertId + i

);

statement.setMutatedRows(ids);

} else {

const idRows = await db.query(`

select t.val

from json_table(

@subzero_ids,

'$[*]' columns (val integer path '$')

) as t

left join json_table(

@subzero_ignored_ids,

'$[*]' columns (val integer path '$')

) as t2 on t.val = t2.val

where t2.val is null;

`);

statement.setMutatedRows(idRows);

}

const returnRepresentation = request.headers

.get("Prefer")

?.includes("return=representation");

if (returnRepresentation) {

const { query: select_query, parameters: select_parameters } =

statement.fmtSelectStatement();

const selectResult = await db.query(select_query, select_parameters);

result = selectResult[0].body ? JSON.parse(selectResult[0].body) : null;

}

}

await db.query("COMMIT");

} catch (e) {

await db.query("ROLLBACK");

throw e;

} finally {

await db.end();

}

return new Response(JSON.stringify(result), {

status: 200,

headers: {

"Content-Type": "application/json",

},

});

}This is a little bit hairy - and you don't need to understand all of it. Essentially Subzero is reading properties of the request to create a MySQL statement. If the request is a GET, it simply executes that request. If it is a mutation, then a two-step mutation is performed with errors causing a rollback. With this last addition, we have a functioning MySQL PostgREST API that you can push to Vercel!

Making Queries#

Now that we have an API endpoint running, you can use the postgres.js library

to start making requests from your NextJS frontend. You can create a simple

page.tsx at the root of your project for this.

"use client";

import { PostgrestClient } from "@supabase/postgrest-js";

const REST_URL = `${process.env.NEXT_PUBLIC_API_URL}/api`;

export default function Home() {

const performFetch = async () => {

const postgrest = new PostgrestClient(REST_URL);

const { data, error } = await postgrest

.from("products")

.select("*")

.order("id", { ascending: false });

if (data) {

console.log(data);

}

if (error) {

console.error(JSON.stringify(error, null, 2))

}

};

return (

<button

onClick={performFetch}

>

Fetch Table Data

</button>

);

}

If you'd like to see this in action, check out my demo application.

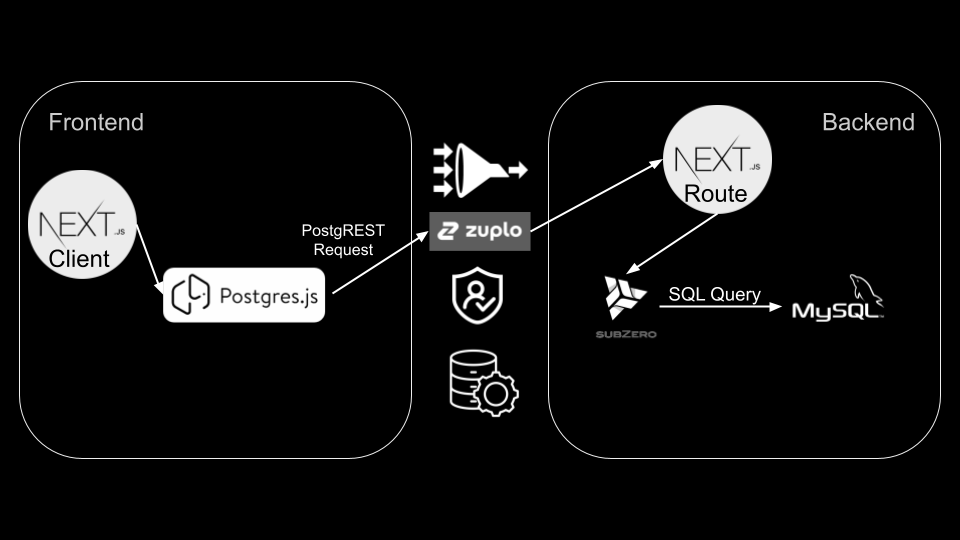

(Optional) API Gateway#

Before exposing an API publicly, I'd typically recommend you put it behind an API gateway (ex. Zuplo). Some features you might find valuable include:

- Rate Limiting requests

- Caching DB fetches

- Authentication

Setup is pretty straightforward, simply clone and run

this repo, and change

your process.env.NEXT_PUBLIC_API_URL to match your gateway's URL.

Wrapping-Up#

Congratulations, you now have a serverless MySQL REST API with a Supabase developer experience! All of the code can be found in this repo so you can run it yourself. All of the services used above have generous free tiers, so this is a good choice for hobby projects.

If you are not a fan of using Typescript/Node as your backend - take a look at this PHP alternative which you can likely run on Vercel's PHP runtime to keep everything in a monorepo.

If you would prefer a more automated solution - check out these options.

Lastly, if you want to be able to customize your CRUD APIs while still matching your database table schemas - check out how to generate OpenAPI from your database.