How API Developers Can Use Edge Computing to Optimize API Performance

Your users expect lightning-fast responses when they interact with your APIs. But when those requests travel across continents to distant data centers, even milliseconds matter. Edge computing brings computation closer to where data is created, dramatically improving API performance, reducing costs, and creating more responsive user experiences.

Gartner predicted that by 2025, 75% of enterprise-generated data would originate outside of centralized data centers. This massive shift makes edge computing increasingly relevant for modern API development, and understanding edge computing best practices is essential for staying ahead. Yet despite its benefits, only 27% of organizations have implemented edge computing, while 54% are still exploring its potential.

The good news? By understanding and implementing edge principles in your API architecture, you can break free from the limitations of traditional centralized models. Let's dive into how edge computing can transform your API performance and user experience.

- What Is Edge Computing and Why Should API Developers Care?

- Performance Benefits That Transform User Experience

- Breaking Through Roadblocks: How Edge Computing Clears the Path

- Cloud vs. Edge: Creating Your Perfect Mix

- Edge in Action: Real People Solving Real Problems

- API Developer's Playbook: Winning Strategies for Edge Deployment

- The Future is Edge: What's Coming Next for API Developers

What Is Edge Computing and Why Should API Developers Care?

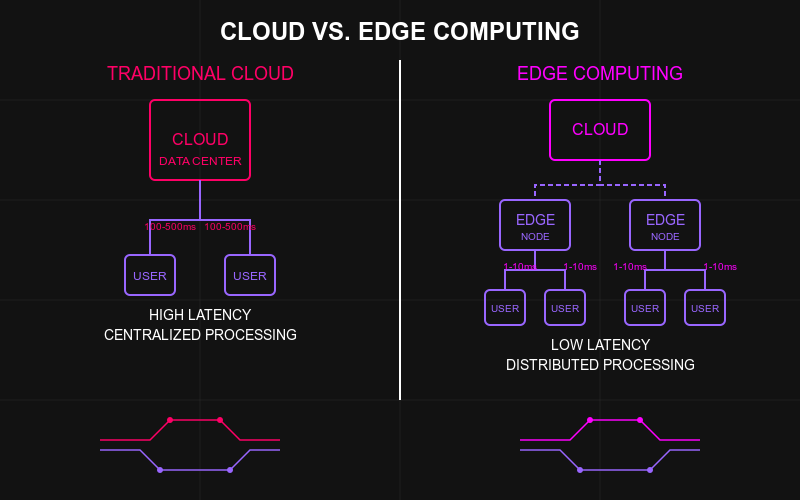

Edge computing flips the traditional computing model on its head. Instead of sending all data to distant cloud servers for processing, edge computing brings the processing power closer to where data originates.

Beyond the Central Data Center

Think of edge computing as moving the intelligence to where it's most needed. Rather than routing every API request through a central hub, edge computing creates a distributed network of processing nodes positioned strategically close to users and data sources.

This decentralized approach addresses three critical network challenges:

- Bandwidth limitations: By processing API requests locally, you maximize bandwidth efficiency and reduce strain on broader networks.

- Latency reduction: When your API endpoints operate closer to users, you dramatically reduce data travel time, resulting in near-instantaneous responses.

- Congestion mitigation: The global internet infrastructure faces constant congestion as billions of devices exchange data. Edge computing alleviates this by handling API requests at local points and applying API rate limit strategies to manage traffic efficiently.

These are particularly critical for time-sensitive applications like financial trading platforms, autonomous vehicles, or real-time analytics dashboards.
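To put the latency bullet in numbers, here is a back-of-the-envelope sketch. It assumes light in optical fiber covers roughly 200 km per millisecond (about two-thirds of its speed in a vacuum) and ignores routing detours, queuing, and server processing, so real round trips are longer. The distances are hypothetical.

```typescript
// Rough lower bound on network round-trip time from distance alone.
// Assumption: signals in optical fiber travel about 200,000 km/s,
// i.e. roughly 200 km per millisecond.
const FIBER_KM_PER_MS = 200;

/** Minimum round-trip propagation delay (ms) for a given one-way distance (km). */
function minRoundTripMs(oneWayKm: number): number {
  if (oneWayKm < 0) throw new RangeError("distance must be non-negative");
  return (2 * oneWayKm) / FIBER_KM_PER_MS;
}

// Hypothetical distances for illustration only.
const distantRegionKm = 6000; // user on another continent from the data center
const nearbyEdgeKm = 100; // edge node in the same metro area

console.log(minRoundTripMs(distantRegionKm)); // 60
console.log(minRoundTripMs(nearbyEdgeKm)); // 1
```

Every extra round trip, such as those needed to set up a new TCP and TLS connection, pays this distance cost again, which is why shortening the path to the user compounds.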

Serverless at the Edge

For API developers specifically, edge computing enables serverless architectures that, when combined with the right features of an API gateway, offer significant advantages:

- Automatic resource allocation based on demand

- Reduced operational costs through pay-per-use models

- Improved regional compliance by keeping data processing local

Tools like Zuplo facilitate API development in serverless environments. Rather than maintaining constantly running server infrastructure, your API processing can scale efficiently at the edge, activating only when needed.
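As a rough illustration of the serverless model, the sketch below is a single stateless function that runs once per request. It is written against the web-standard Fetch API `Request` and `Response` objects, which many edge runtimes expose; the function name `handleRequest`, the `/hello` route, and the caching header are assumptions for illustration, not Zuplo's API.

```typescript
// Hypothetical stateless edge function: one call per request, no shared state.
function handleRequest(request: Request): Response {
  const url = new URL(request.url);

  if (request.method !== "GET") {
    return new Response("Method Not Allowed", {
      status: 405,
      headers: { allow: "GET" },
    });
  }

  // Hypothetical endpoint: greet the caller by the optional ?name= parameter.
  const name = url.searchParams.get("name") ?? "world";
  return new Response(JSON.stringify({ message: `Hello, ${name}` }), {
    status: 200,
    headers: {
      "content-type": "application/json",
      // Allow shared caches near the user to reuse the response briefly.
      "cache-control": "public, max-age=60",
    },
  });
}

const res = handleRequest(new Request("https://api.example.com/hello?name=Ada"));
console.log(res.status, res.headers.get("content-type")); // 200 application/json
```

Because the function keeps no state between calls, the platform is free to run it in whichever location is closest to the caller and to scale instances up or down with demand.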

Performance Benefits That Transform User Experience

When it comes to API performance, processing data closer to its source creates significant advantages that translate directly to better user experiences.

Reduced Latency: Speed That Users Can Feel

When API endpoints operate at the network edge, the performance impact is immediate and tangible:

- Gaming and AR applications become more immersive with minimal lag

- E-commerce transactions feel instantaneous, reducing cart abandonment

- Financial services can process trades and transactions faster, creating competitive advantage

Every millisecond saved in API response time can improve user retention. According to Amazon, every 100ms of added latency cost it 1% in sales. For APIs powering critical applications, these performance gains directly affect business outcomes, which makes deploying APIs on edge networks an effective way to improve both performance and user experience.

Improved Reliability When the Central Cloud Falters

Edge computing enhances API reliability by reducing dependency on central infrastructure.

- Continued functionality during outages: Edge-processed APIs can continue to function even when central cloud systems experience downtime.

- Real-time processing capabilities: Data can be processed instantly near its origin, allowing for immediate reactions to incoming information.

- Offline operation: Edge computing ensures that APIs remain functional during connectivity disruptions by storing and processing data locally, then syncing with backend systems once connections are restored.

These capabilities are particularly valuable for mission-critical systems where continuous operation is non-negotiable.
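One common way to keep serving during an origin outage is a stale-if-error cache: return fresh data when the origin answers, and fall back to the last good copy when it does not. The sketch below is hypothetical; the class name, the five-minute staleness limit, and the synchronous `fetchOrigin` callback (a real edge function would `await fetch()`) are assumptions chosen to keep it short.

```typescript
// Hypothetical stale-if-error cache: prefer a fresh origin response, but fall
// back to the last good copy stored at the edge if the origin call fails.
interface CacheEntry {
  body: string;
  storedAt: number; // epoch ms when the copy was cached
}

class StaleIfErrorCache {
  private readonly entries = new Map<string, CacheEntry>();
  private readonly maxStaleMs: number;

  constructor(maxStaleMs: number) {
    this.maxStaleMs = maxStaleMs;
  }

  get(
    key: string,
    fetchOrigin: () => string, // assumption: synchronous for brevity
    now: number = Date.now(),
  ): { body: string; stale: boolean } {
    try {
      const body = fetchOrigin();
      this.entries.set(key, { body, storedAt: now });
      return { body, stale: false };
    } catch (err) {
      const cached = this.entries.get(key);
      if (cached !== undefined && now - cached.storedAt <= this.maxStaleMs) {
        return { body: cached.body, stale: true }; // degraded but still useful
      }
      throw err; // nothing usable cached: surface the original failure
    }
  }
}

const cache = new StaleIfErrorCache(5 * 60_000); // serve copies up to 5 minutes old
cache.get("/prices", () => '{"usd":1}', 0); // origin healthy: fresh copy cached
const fallback = cache.get("/prices", () => {
  throw new Error("origin unreachable");
}, 60_000);
console.log(fallback.stale); // true
```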

Cost Efficiency That Scales With Usage

Moving API processing to the edge creates significant cost advantages:

- Reduced bandwidth usage: By processing data locally, edge computing minimizes the amount of information transmitted over networks to centralized servers.

- Optimized resource allocation: With pay-per-use edge functions, compute runs only when requests arrive, so you pay for processing power in proportion to actual traffic rather than for idle capacity.

- Lower network infrastructure costs: Processing and storing data locally alleviates network congestion and decreases bandwidth demand, which reduces the network capacity you need to pay for.

Hosted API gateways can further reduce operational costs and simplify deployment. For data-intensive APIs, these savings can be substantial while performance improves at the same time, a rare win-win in technology implementation.

Breaking Through Roadblocks: How Edge Computing Clears the Path

The problems that plague traditional cloud systems don't just disappear on their own. Edge computing offers practical solutions to frustrations that developers have struggled with for years.

Saying Goodbye to Network Bottlenecks

Remember the last time a video call froze or an app timed out? That's what happens when networks get overwhelmed:

- Bandwidth Bottlenecks: Imagine trying to push an ocean through a garden hose. That's what happens when huge data loads hit limited network capacity. Edge computing keeps most data local, so only what's truly needed travels across your network.

- The Distance Problem: When data needs to travel thousands of miles to a data center and back, delays are inevitable. Edge computing cuts this journey dramatically—like having a conversation with someone in the same room instead of shouting across a football field.

- Traffic Jams in the Cloud: When millions of devices all try to talk to the same cloud servers simultaneously, digital gridlock happens. Edge computing creates local processing "neighborhoods" that handle traffic before it hits the main highways. Implementing rate limiting in distributed systems further helps prevent bottlenecks and ensures stable performance.
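A token bucket is one widely used rate-limiting algorithm that an edge node can apply locally before traffic reaches your backend. In this minimal sketch, the burst size of 5 and refill rate of 1 request per second are illustrative assumptions, and the in-memory `Map` means each node enforces limits independently.

```typescript
// Hypothetical per-client token bucket held in one edge node's memory.
class TokenBucket {
  private tokens: number;
  private lastRefill: number;
  private readonly capacity: number;
  private readonly refillPerSec: number;

  constructor(capacity: number, refillPerSec: number, now: number = Date.now()) {
    this.capacity = capacity;
    this.refillPerSec = refillPerSec;
    this.tokens = capacity; // start full so short bursts are allowed
    this.lastRefill = now;
  }

  /** True if the request may proceed; false means respond with HTTP 429. */
  tryRemove(now: number = Date.now()): boolean {
    const elapsedSec = (now - this.lastRefill) / 1000;
    // Add tokens for the time elapsed, never exceeding capacity.
    this.tokens = Math.min(this.capacity, this.tokens + elapsedSec * this.refillPerSec);
    this.lastRefill = now;
    if (this.tokens >= 1) {
      this.tokens -= 1;
      return true;
    }
    return false;
  }
}

// One bucket per client key (for example, an API key).
const buckets = new Map<string, TokenBucket>();

function allowRequest(clientKey: string, now: number = Date.now()): boolean {
  let bucket = buckets.get(clientKey);
  if (!bucket) {
    bucket = new TokenBucket(5, 1, now); // burst of 5, then 1 request/second
    buckets.set(clientKey, bucket);
  }
  return bucket.tryRemove(now);
}

for (let i = 0; i < 6; i++) console.log(allowRequest("client-123", 0));
// the first five print true, the sixth prints false
```

Because every node keeps its own buckets, a client spread across several edge locations can exceed the nominal global limit; platforms that need strict global limits typically coordinate counters through a shared store, at the cost of some extra latency.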

Keeping Regulators Happy Without the Headaches

Data regulations keep getting stricter, but edge computing turns compliance from a nightmare into a natural part of your architecture:

- Data Stays Home: When user data never leaves its country of origin, you avoid much of the complexity of international data transfer regulations. Edge computing keeps processing local, which makes data residency requirements far easier to demonstrate to regulators.

- Privacy By Design: Instead of collecting everything and hoping for the best, edge computing lets you process sensitive data where it's created. Only send the insights, not the raw personal information.

- Right-Sized Security: Different regions have different security requirements. Edge computing lets you tailor your approach to each market without rebuilding your entire infrastructure.

Edge computing enables efficient data handling by processing data locally and sending only necessary information to central servers.
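The "send only the insights" pattern can be as simple as aggregating locally. In this hypothetical sketch, raw readings tagged with user IDs stay on the edge node and only a summary object would be forwarded; the `Reading` and `Summary` shapes are assumptions for illustration.

```typescript
// Hypothetical edge-side aggregation: raw, identifiable readings stay local;
// only the summary is meant to travel to the central service.
interface Reading {
  userId: string; // personal identifier: never leaves the edge node
  value: number;
}

interface Summary {
  count: number;
  mean: number;
  max: number;
}

function summarize(readings: Reading[]): Summary {
  if (readings.length === 0) return { count: 0, mean: 0, max: 0 };
  let sum = 0;
  let max = -Infinity;
  for (const r of readings) {
    sum += r.value;
    max = Math.max(max, r.value);
  }
  return { count: readings.length, mean: sum / readings.length, max };
}

const local: Reading[] = [
  { userId: "u-1", value: 72 },
  { userId: "u-2", value: 88 },
  { userId: "u-3", value: 80 },
];
// Only this object would be sent upstream; it contains no user IDs.
console.log(JSON.stringify(summarize(local))); // {"count":3,"mean":80,"max":88}
```

Aggregation reduces exposure but is not a guarantee of anonymity on its own: summaries over very small groups can still reveal individuals, so a minimum group size before forwarding is worth considering.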

When Things Go Wrong (And They Always Do)

The internet isn't perfect, and neither is your cloud connection. Edge computing gives you backup plans for your backup plans:

- Works With or Without Internet: When your connection drops, edge systems keep running, storing what they need locally until the connection returns.

- Independent Intelligence: Each edge node can make its own decisions using pre-defined rules or machine learning, no phone home required.

- No Single Point of Failure: Traditional systems crash when their central server goes down. With edge computing, losing one node is like losing one bee from the hive—the system keeps buzzing along.
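In practice, "no single point of failure" usually comes down to ordered failover. The sketch below is hypothetical: the node names, the 30-second cooldown, and the in-memory health state are assumptions, and a real system would update health from actual request failures or health checks.

```typescript
// Hypothetical failover: try nodes in preference order, skipping any that
// failed recently, so losing one node does not take the service down.
interface NodeState {
  name: string;
  unhealthyUntil: number; // epoch ms; 0 means healthy
}

function pickNode(nodes: NodeState[], now: number = Date.now()): NodeState | undefined {
  return nodes.find((n) => n.unhealthyUntil <= now);
}

function markFailed(node: NodeState, cooldownMs: number, now: number = Date.now()): void {
  // Take the node out of rotation for a cooldown period, not forever.
  node.unhealthyUntil = now + cooldownMs;
}

const nodes: NodeState[] = [
  { name: "edge-nearest", unhealthyUntil: 0 },
  { name: "edge-regional", unhealthyUntil: 0 },
  { name: "cloud-origin", unhealthyUntil: 0 },
];

markFailed(nodes[0], 30_000, 1_000);
console.log(pickNode(nodes, 2_000)?.name); // edge-regional
console.log(pickNode(nodes, 40_000)?.name); // edge-nearest
```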

Cloud vs. Edge: Creating Your Perfect Mix

Not every API needs the same home. Sometimes the cloud is perfect; other times the edge makes more sense; and often the best solution is a bit of both.

When to Send Your APIs to the Edge

The cloud is amazing for heavy number-crunching, storing years of data, and handling tasks that can wait a few extra milliseconds. But there are times when your APIs need something different:

- When Every Millisecond Counts: For gaming, trading platforms, or AR applications, users notice even tiny delays. Edge APIs deliver nearly instant responses because they're right in the neighborhood.

- When the Internet Gets Spotty: In retail stores, warehouses, or remote locations, internet connections aren't always reliable. Edge APIs keep working even when the cloud connection takes a vacation.

- When Data Laws Get Strict: Some countries insist that certain data never leaves their borders. Edge APIs process sensitive information locally, keeping regulators happy without sacrificing performance.

- When Bandwidth Costs Add Up: Sending every bit of data to the cloud gets expensive fast. Edge APIs filter the flood, sending only what's truly needed upstream.

Mixing Edge and Cloud: Getting the Best of Both Worlds

The smartest API strategies don't choose between edge and cloud—they use each where it makes the most sense:

Cloud Strengths:

- Handles complex analytics that need serious computing muscle

- Makes updates and versioning simpler (change once, deploy everywhere)

- Scales up to handle massive, unexpected traffic spikes

Edge Strengths:

- Delivers lightning-fast responses to local users

- Keeps working when internet connections get flaky

- Processes sensitive data without sending it across the globe

In hybrid cloud-edge deployments, an API gateway is a natural place to decide which requests are handled at the edge and which are forwarded to the cloud, so you get the performance benefits of both. Modern self-driving cars show this hybrid approach in action: critical safety decisions happen immediately at the edge (braking for a pedestrian can't wait for a round trip to the cloud), while route planning and software updates come from the central cloud. Your APIs can follow the same playbook.
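A gateway-level split between edge and cloud can be expressed as a small routing table. This is a hypothetical sketch: the routes, the first-match-wins rule, and the default of sending unknown traffic to the cloud are assumptions, and the prefix matching is deliberately naive (it would also match `/v1/configuration`).

```typescript
// Hypothetical routing table for a hybrid deployment.
type Target = "edge" | "cloud";

interface RouteRule {
  method: string;
  pathPrefix: string;
  target: Target;
}

// Illustrative rules only; the first matching rule wins.
const rules: RouteRule[] = [
  { method: "GET", pathPrefix: "/v1/config", target: "edge" }, // small, cacheable, latency-sensitive
  { method: "POST", pathPrefix: "/v1/validate", target: "edge" }, // stateless checks near the user
  { method: "GET", pathPrefix: "/v1/reports", target: "cloud" }, // heavy analytics
];

function routeFor(method: string, path: string): Target {
  const rule = rules.find(
    (r) => r.method === method.toUpperCase() && path.startsWith(r.pathPrefix),
  );
  return rule?.target ?? "cloud"; // default: let the central backend handle it
}

console.log(routeFor("get", "/v1/config/flags")); // edge
console.log(routeFor("GET", "/v1/reports/2024")); // cloud
console.log(routeFor("DELETE", "/v1/config")); // cloud
```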

Edge in Action: Real People Solving Real Problems#

Edge computing isn't just theoretical—it's already transforming how industries operate. Let's look at how real organizations are using edge APIs to solve everyday challenges.

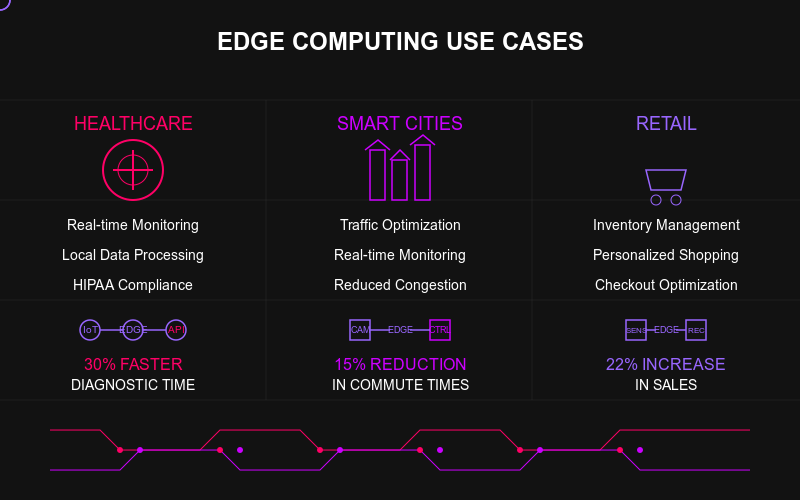

Healthcare: When Seconds Save Lives

In hospitals and clinics around the world, edge computing is turning data into life-saving actions:

- Smart Devices That Don't Need to Phone Home: Modern insulin pumps don't wait for cloud permission to adjust dosage—they make real-time decisions right at the edge, giving patients tighter control of their blood sugar levels.

- Spotting Problems Before They're Visible: Edge-powered imaging systems can analyze X-rays and scans on-site, flagging potential issues for radiologists in seconds rather than waiting for cloud processing.

- Keeping Patient Data Where It Belongs: With privacy regulations like HIPAA growing stricter, edge computing processes sensitive information locally, reducing the risk of exposing protected health information during transmission.

By moving AI analysis to edge devices instead of waiting for cloud processing, healthcare providers can get patients from scan to treatment plan much faster.

Smart Cities: Making Traffic Flow, Not Stop

Cities are using edge computing to make urban life smoother for everyone:

- Lights That Actually Understand Traffic: New York City's smart traffic system doesn't just follow pre-set patterns—edge devices analyze camera feeds in real-time to adjust signal timing based on actual conditions.

- Transit That Adapts to Demand: Public transportation systems use edge computing to track passenger loads, adjust schedules on the fly, and keep riders informed with real-time updates that don't depend on central servers.

- Shipments That Never Get Lost: Logistics companies embed edge devices in vehicles and warehouses, tracking everything from temperature to location without constant cloud connectivity.

The lesson? Smarter infrastructure doesn't always need more roads—just more intelligent control systems.

Retail: Making Shopping Personal Again

Brick-and-mortar stores are fighting back against e-commerce with edge-powered experiences:

- Inventory That Counts Itself: Smart shelves with embedded sensors and edge processing alert staff to low-stock or misplaced items without waiting for central system updates.

- Personalized Experiences Without the Creepy Factor: Edge devices can recognize returning customers and tailor recommendations based on previous purchases—all without sending identifying information to the cloud.

- Checkout That Doesn't Make You Wait: Advanced systems monitor lines and open new registers automatically when needed, keeping stores running smoothly even during peak hours.

API Developer's Playbook: Winning Strategies for Edge Deployment

Just knowing about edge computing isn't enough—you need practical approaches to make it work for your APIs. Here's how successful developers are maximizing their edge advantage.

Design for Speed From Day One

When building edge-optimized APIs, performance starts with thoughtful design:

- Location Awareness That Makes Sense: Smart edge APIs automatically route requests to the nearest node, putting your processing power right where your users need it.

- Streamlined Payloads That Fly: Every unnecessary byte slows things down. Trim your API responses to include only what's essential—your mobile users with spotty connections will thank you.

- Compression That Actually Helps: The right compression can speed up transmission without bogging down processing. Choose techniques that balance size reduction with processing demands.

Smart design decisions translate directly into real performance gains.
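Two of the ideas above, trimming payloads and compressing only when it pays off, are sketched below using Node's built-in `zlib` module. The `pickFields` helper, the 1 KB threshold, and gzip level 1 are assumptions; a real handler would also check the client's `Accept-Encoding` header and set `Content-Encoding` on the response.

```typescript
import { gzipSync } from "node:zlib";

// Hypothetical helper: keep only the fields the client asked for.
function pickFields(obj: Record<string, unknown>, fields: string[]): Record<string, unknown> {
  const out: Record<string, unknown> = {};
  for (const f of fields) {
    if (f in obj) out[f] = obj[f];
  }
  return out;
}

// Assumption: below ~1 KB, compression overhead rarely pays off. Tune per workload.
const MIN_COMPRESS_BYTES = 1024;

function encodeBody(json: string): { body: Buffer; encoding: "gzip" | "identity" } {
  const raw = Buffer.from(json, "utf8");
  if (raw.length < MIN_COMPRESS_BYTES) return { body: raw, encoding: "identity" };
  // Level 1 favors speed over ratio: somewhat larger output, less CPU per request.
  return { body: gzipSync(raw, { level: 1 }), encoding: "gzip" };
}

const product = { id: 42, name: "Lamp", description: "A long marketing description", reviews: [] };
const trimmed = pickFields(product, ["id", "name"]);
console.log(JSON.stringify(trimmed)); // {"id":42,"name":"Lamp"}
console.log(encodeBody(JSON.stringify(trimmed)).encoding); // identity
```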

Lock It Down Without Slowing It Down

Distributed systems need smart security approaches that don't sacrifice speed:

- Trust Nothing, Verify Everything: In edge environments, traditional perimeter security falls apart. Adopt zero-trust principles that verify every request, regardless of where it comes from.

- Encrypt Everything, Everywhere: When your data lives and moves across multiple edge locations, encryption isn't optional—it's essential for both data in transit and at rest.

- Authentication That Doesn't Get in the Way: Strong security doesn't have to mean clunky user experiences. Implement context-aware authentication that adjusts requirements based on risk levels.

Implementing robust security in edge computing is crucial to protect data in decentralized environments. Remember the 2024 incident in which attackers abused an unauthenticated API endpoint in Twilio's Authy service to compile 33 million phone numbers? Verifying every request, as zero-trust principles demand, is exactly the kind of control designed to prevent that.
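One concrete zero-trust building block is verifying a signature on every request at the edge node that receives it. The scheme below is a hypothetical HMAC-SHA256 signature over a timestamp and the request body, using Node's built-in `crypto` module; the `sign` and `verifyRequest` names, the payload format, and the five-minute window are assumptions, not a standard.

```typescript
import { createHmac, timingSafeEqual } from "node:crypto";

// Hypothetical scheme: client and gateway share a secret; the client signs
// "<timestamp>.<body>" and every edge node verifies every request.
const MAX_SKEW_MS = 5 * 60 * 1000; // reject signatures older than 5 minutes

function sign(secret: string, timestamp: number, body: string): string {
  return createHmac("sha256", secret).update(`${timestamp}.${body}`).digest("hex");
}

function verifyRequest(
  secret: string,
  timestamp: number,
  body: string,
  signatureHex: string,
  now: number = Date.now(),
): boolean {
  if (Math.abs(now - timestamp) > MAX_SKEW_MS) return false; // narrows replay window
  const expected = Buffer.from(sign(secret, timestamp, body), "hex");
  const received = Buffer.from(signatureHex, "hex");
  // timingSafeEqual throws on length mismatch, so compare lengths first.
  return received.length === expected.length && timingSafeEqual(received, expected);
}

const secret = "demo-secret"; // hypothetical; load real secrets from a secret store
const ts = Date.now();
const sig = sign(secret, ts, '{"amount":10}');
console.log(verifyRequest(secret, ts, '{"amount":10}', sig)); // true
console.log(verifyRequest(secret, ts, '{"amount":99}', sig)); // false
```

The timestamp window narrows, but does not eliminate, replay attacks; rejecting reused signatures within the window would require tracking recently seen values. Many APIs rely on established schemes such as OAuth 2.0 bearer tokens or JWTs rather than a custom signature.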

Plan for When Things Break (Because They Will)

The edge isn't perfect, and neither are the connections to it. Build resilience into your APIs:

- Graceful Degradation That Makes Sense: Design your APIs to offer reduced but functional capabilities when connectivity is limited.

- Offline Modes That Actually Work: For mobile apps and IoT devices, build in the ability to queue requests locally until connectivity returns.

- Async Patterns That Keep Things Moving: Not every operation needs an immediate response. Use asynchronous patterns to handle operations that can wait for connectivity to improve.
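The offline-mode and async bullets above can be combined into a small store-and-forward queue. This hypothetical sketch keeps requests in FIFO order, stops flushing at the first failure to preserve ordering, and drops an item after a configurable number of failed attempts; the synchronous `send` callback stands in for a real HTTP call.

```typescript
// Hypothetical store-and-forward queue for a client or edge device.
interface QueuedRequest {
  id: string; // idempotency key so the server can ignore duplicate replays
  path: string;
  body: string;
  attempts: number;
}

class OfflineQueue {
  private items: QueuedRequest[] = [];
  private readonly maxAttempts: number;

  constructor(maxAttempts: number) {
    this.maxAttempts = maxAttempts;
  }

  enqueue(id: string, path: string, body: string): void {
    this.items.push({ id, path, body, attempts: 0 });
  }

  get size(): number {
    return this.items.length;
  }

  /** Sends queued requests in order; `send` returns true on success. Returns delivered IDs. */
  flush(send: (req: QueuedRequest) => boolean): string[] {
    const delivered: string[] = [];
    while (this.items.length > 0) {
      const next = this.items[0];
      if (send(next)) {
        delivered.push(next.id);
        this.items.shift();
        continue;
      }
      next.attempts += 1;
      if (next.attempts >= this.maxAttempts) {
        this.items.shift(); // give up on this item; a real app might log or alert
      }
      break; // probably still offline: retry the rest on the next flush
    }
    return delivered;
  }
}

const queue = new OfflineQueue(5);
queue.enqueue("order-123", "/orders", '{"sku":"A1"}'); // hypothetical request made while offline
const sent = queue.flush(() => true); // later, connectivity is back
console.log(sent.length); // 1
```

Giving each queued request an idempotency key, like the `id` field here, lets the server safely ignore duplicates if a request is replayed after a timeout.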

The Future is Edge: What's Coming Next for API Developers

The edge computing landscape is evolving at breakneck speed. Here's what forward-thinking API developers are preparing for.

5G and Edge: A Perfect Partnership That's Finally Here

5G isn't just about faster phones—it's creating entirely new possibilities for edge computing:

- Ultra-Low Latency That Changes Everything: 5G is designed for air-interface latency as low as 1ms, enabling applications that seemed impossible before, from remote surgery to truly immersive AR/VR.

- Mobile Bandwidth That Actually Keeps Up: With peak data rates of 10Gbps and beyond, 5G eases the bottlenecks that previously limited what APIs could do on mobile devices.

- Device Density That Handles the IoT Explosion: 5G networks are designed to support up to 1 million devices per square kilometer, which suits smart cities and industrial IoT deployments.

According to GSMA Intelligence, 5G connections exceeded 1.5 billion by the end of 2023, and they are forecast to keep growing rapidly. This explosive growth is creating a foundation for edge APIs that can deliver previously impossible experiences.

Edge AI: Intelligence Where You Need It Most

AI is breaking free from the data center and moving to the edge:

- Smart Cameras That Actually Understand What They See: Edge AI lets security cameras analyze video streams locally, only alerting you when something important happens instead of streaming everything to the cloud.

- Voice Assistants That Don't Need to Phone Home: Edge AI enables voice recognition and natural language processing right on devices, improving privacy and responsiveness.

- Personalization Without the Privacy Concerns: Retail systems can analyze customer behavior and preferences locally, delivering personalized experiences without sending sensitive data to central servers.

Common Standards: Making Edge Development Actually Manageable

As edge computing matures, fragmentation is giving way to standardization:

- Cross-Platform Tools That Actually Work: Major cloud providers and hardware manufacturers are developing common frameworks and APIs that work consistently across different edge environments.

- Simplified Deployment That Doesn't Require a PhD: New tools are making it easier to deploy and manage applications across distributed edge locations without specialized expertise.

- Interoperability That Makes Sense: Emerging standards are enabling edge devices and applications from different vendors to work together seamlessly.

This standardization means API developers can build once and deploy across multiple edge environments, dramatically reducing development costs and time-to-market.

Supercharging Your APIs: Why Edge Computing Matters Now

Edge computing is transforming how API developers deliver fast, reliable experiences. By processing data close to where it's created, your APIs can overcome bandwidth limitations, slash latency, and keep working even when network conditions deteriorate.

Ready to bring your APIs to the edge? Zuplo's developer-focused platform makes implementing edge-optimized APIs simple with intuitive tools and ready-to-deploy performance policies. Sign up for a free Zuplo account and start delivering API experiences that keep your users coming back.