10 Game-Changing Strategies to Supercharge Your API Gateway Performance

API Gateways serve as the front door to your applications, making their performance critical to your system's responsiveness. Performance bottlenecks at the gateway level can ripple throughout your entire system, affecting everything from user satisfaction to operational costs.

Think about it – have you ever clicked a button in an app and found yourself staring at a spinning wheel? That frustration often traces back to API performance issues. With the right optimization strategies, you can transform your gateway from a bottleneck into a performance powerhouse. Let's dive into ten powerful approaches that will help you optimize performance, reduce latency, and manage costs effectively.

10 Key Strategies to Supercharge Your API Gateway Performance#

1. Response Caching - Your First Line of Defense

2. Rate Limiting and Request Throttling - Protect Your Resources

3. High-Performance Routing - Every Millisecond Matters

4. Load Balancing with Multiple Gateway Instances

5. Global API Endpoints - Bringing Your API Closer to Users

6. API Gateway Framework Optimization

7. Payload Compression - Less Is More

8. Connection Pooling - Eliminate Connection Overhead

9. Comprehensive Monitoring - You Can't Improve What You Don't Measure

10. Microgateways for Microservices - Right-Sized Solutions

The Gateway to Better Performance#

Your API Gateway works like a traffic controller, directing requests to the right destinations while enforcing policies that keep your system secure and stable. When this controller gets overwhelmed, everything slows down – and your users notice. Here's how to keep that traffic flowing smoothly.

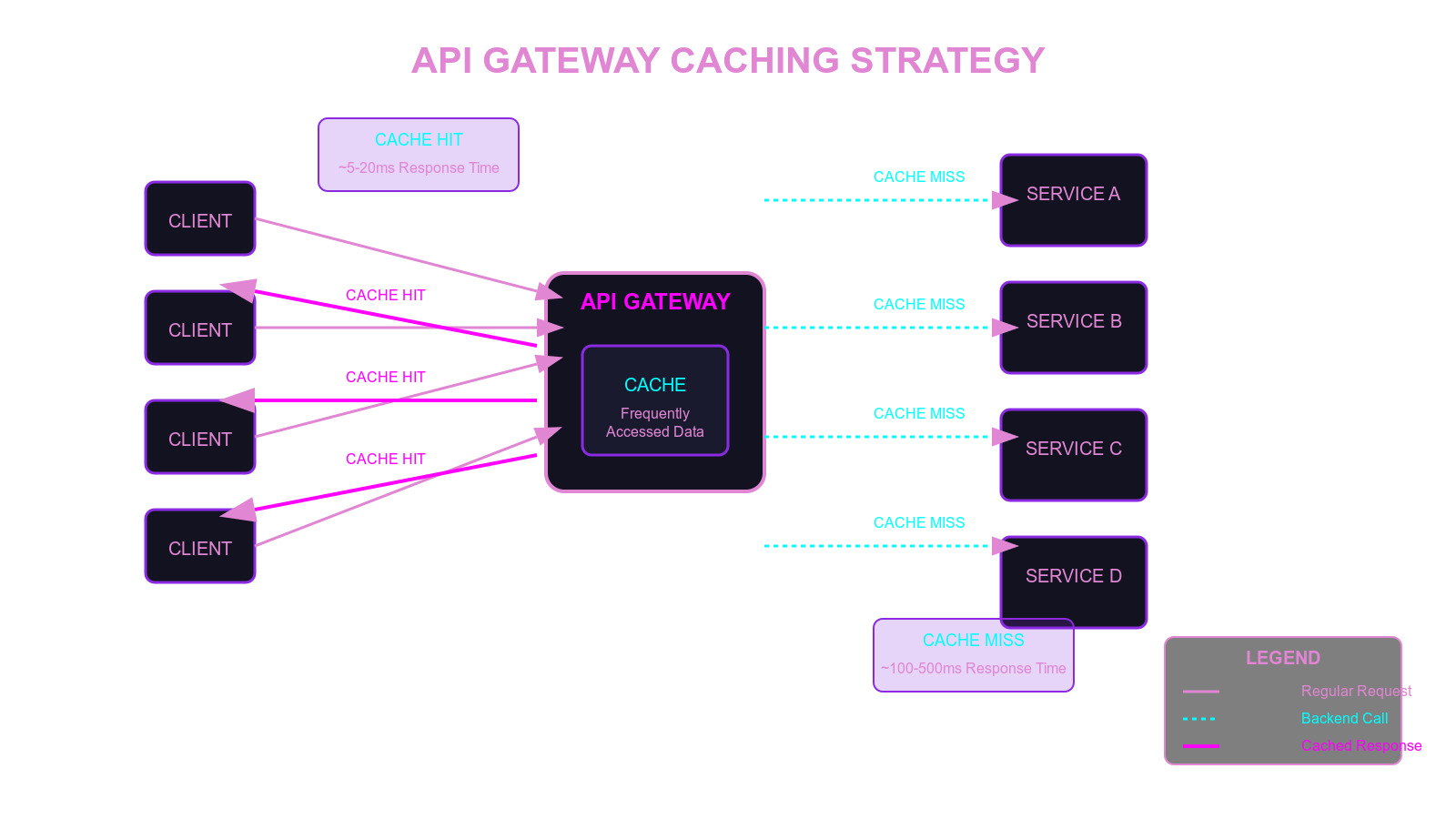

1. Response Caching: Your First Line of Defense#

Want to know the single most effective way to boost API Gateway performance? It's caching – hands down. By storing frequently requested data, your gateway can serve responses without bothering your backend services, dramatically reducing response times and server load.

Cache APIs are a useful tool here: by implementing caching at the gateway level, you can store and reuse responses programmatically and build toward more advanced caching techniques.

What makes caching so powerful is its simplicity and effectiveness. For read-heavy operations where data doesn't change frequently, caching can slash response times from hundreds of milliseconds to just a few. You'll see the biggest impact when caching:

- Product catalogs and listings

- User profile information that changes infrequently

- Reference data like country codes or categories

- Public content that's accessed by many users

To get caching right, you'll need to consider:

- Cache expiration policies that balance freshness with performance

- Cache invalidation mechanisms for when data does change

- Partial caching for composite responses where only certain elements need caching

- How to manage caching policies effectively across your APIs

Not everything should be cached, though. You'll need to think about which resources make sense based on how often they change and their consistency requirements. For data that changes with every request, caching might just complicate things. Start with your highest-traffic, most stable endpoints to see the biggest immediate gains.
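
The expiration and invalidation considerations above can be sketched in a few lines. This is a minimal illustration, not how any particular gateway implements caching: `TTLCache`, `cached_fetch`, and the cache key format are hypothetical names chosen for the example, and a production cache would also need size limits and eviction.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


class TTLCache:
    """Tiny in-memory response cache with per-entry expiration (illustrative only)."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be exercised without sleeping
        self._store: Dict[str, Tuple[float, Any]] = {}

    def get(self, key: str) -> Optional[Any]:
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self._store[key]  # expired: drop the entry and report a miss
            return None
        return value

    def set(self, key: str, value: Any) -> None:
        self._store[key] = (self.clock() + self.ttl, value)

    def invalidate(self, key: str) -> None:
        """Explicit invalidation for when the underlying data changes."""
        self._store.pop(key, None)


def cached_fetch(cache: TTLCache, key: str, fetch_from_backend: Callable[[], Any]) -> Any:
    """Serve from cache when possible; otherwise call the backend and store the result.

    Note: a backend result of None is treated as a miss and never cached.
    """
    value = cache.get(key)
    if value is None:
        value = fetch_from_backend()
        cache.set(key, value)
    return value
```

A short TTL favors freshness and a long TTL favors hit rate; calling `invalidate` on writes lets you use longer TTLs for data that changes rarely.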

Advanced caching techniques, such as zone caching, can further optimize your API performance by caching responses at different layers. Specific contexts, such as caching responses from AI models, may also call for their own fine-tuned caching strategy.

Most modern API gateways include built-in caching, but for advanced needs, you might want to look at dedicated solutions like Redis or Memcached.

2. Rate Limiting and Request Throttling: Protect Your Resources#

Nothing kills performance faster than an unexpected traffic surge overwhelming your system. Implementing API rate limiting and request throttling helps maintain consistent performance by preventing individual clients from monopolizing your resources.

Effective throttling strategies include:

- Setting appropriate rate limits based on client identity

- Implementing progressive throttling that gradually restricts traffic as thresholds approach

- Providing clear feedback to clients when limits are reached, typically an HTTP 429 (Too Many Requests) response with a Retry-After header, so they can back off gracefully

This protection mechanism serves dual purposes: it safeguards your backend services from traffic spikes while ensuring fair resource allocation among all your users. During peak periods, rate limiting helps maintain predictable performance rather than allowing the system to degrade unpredictably.

For optimal implementation, customize rate limits based on factors such as client IP address, API key, or user account, creating different tiers of access that align with your business priorities. Developers working with Node.js can refer to guides on rate limiting in Node.js to implement these strategies effectively.
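
One common way to implement per-client limits is a token bucket. The sketch below is one possible approach under stated assumptions, not a description of any specific gateway: `TokenBucketLimiter` is a hypothetical class, the client identifier could be an API key, IP address, or account ID, and state is kept in process memory (a multi-instance deployment would need shared storage).

```python
import time
from typing import Callable, Dict, Tuple


class TokenBucketLimiter:
    """Per-client token bucket: `burst` requests at once, refilled at `rate_per_sec`."""

    def __init__(self, rate_per_sec: float, burst: int,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate_per_sec
        self.burst = burst
        self.clock = clock  # injectable for testing
        # client_id -> (tokens remaining, time of last update)
        self._buckets: Dict[str, Tuple[float, float]] = {}

    def allow(self, client_id: str) -> Tuple[bool, float]:
        """Return (allowed, retry_after_seconds) for one request from client_id."""
        now = self.clock()
        tokens, last = self._buckets.get(client_id, (float(self.burst), now))
        # Refill for the time elapsed since the last request, capped at the burst size.
        tokens = min(float(self.burst), tokens + (now - last) * self.rate)
        if tokens >= 1.0:
            self._buckets[client_id] = (tokens - 1.0, now)
            return True, 0.0
        self._buckets[client_id] = (tokens, now)
        # Time until one full token is available; suitable for a Retry-After header.
        return False, (1.0 - tokens) / self.rate
```

When `allow` returns `False`, the gateway would respond with HTTP 429 and could send the second value, rounded up to whole seconds, as `Retry-After`. Different tiers can be modeled by creating limiters with different `rate_per_sec` and `burst` values.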

3. High-Performance Routing: Every Millisecond Matters#

The efficiency of your routing algorithm significantly impacts gateway performance. Modern API Gateways use advanced techniques such as radix-tree route matching for high-speed request routing, which can substantially reduce processing time for each request.

Fast routing algorithms can shave precious milliseconds off each request by:

- Reducing the computational complexity of matching incoming requests to routes

- Minimizing memory usage during route resolution

- Accelerating pattern matching for complex routing rules

While a few milliseconds might seem insignificant, when multiplied across millions of daily requests, these optimizations add up to substantial performance improvements and cost savings.

Consider implementing smart routing strategies like pattern-based routing to reduce the number of routing rules, and context-aware routing based on request attributes for more efficient request handling.

When evaluating or implementing routing algorithms, pay special attention to their performance characteristics at scale. Approaches that work well with dozens of routes, such as checking each request against an ordered list of patterns, can become noticeably slower with hundreds or thousands of routes because lookup cost grows with the route count. This is why data structures like radix trees are popular in high-performance gateways: lookup time depends mainly on the length of the request path rather than on how many routes are registered, so it stays consistent as your API surface grows.
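
To make the idea concrete, here is a minimal sketch of tree-based route matching. It uses a segment trie (one node per path segment), a simplified relative of the radix tree rather than a true radix tree, and the `:name` parameter syntax, `Router`, and handler names are assumptions made for the example.

```python
from typing import Dict, Optional, Tuple


class RouteNode:
    def __init__(self) -> None:
        self.static: Dict[str, "RouteNode"] = {}   # literal segment -> child
        self.param: Optional["RouteNode"] = None   # child for a ":name" segment
        self.param_name: str = ""
        self.handler: Optional[str] = None


class Router:
    """Segment trie: lookup walks one node per path segment, regardless of route count."""

    def __init__(self) -> None:
        self.root = RouteNode()

    def add(self, pattern: str, handler: str) -> None:
        node = self.root
        for seg in filter(None, pattern.split("/")):
            if seg.startswith(":"):
                if node.param is None:
                    node.param = RouteNode()
                    node.param_name = seg[1:]  # first registered name wins
                node = node.param
            else:
                node = node.static.setdefault(seg, RouteNode())
        node.handler = handler

    def match(self, path: str) -> Optional[Tuple[str, Dict[str, str]]]:
        node = self.root
        params: Dict[str, str] = {}
        for seg in filter(None, path.split("/")):
            if seg in node.static:          # literal segments take priority
                node = node.static[seg]
            elif node.param is not None:    # otherwise fall back to a parameter
                params[node.param_name] = seg
                node = node.param
            else:
                return None
        # No backtracking: a simplification compared with production routers.
        return (node.handler, params) if node.handler is not None else None
```

With routes `/users/:id` and `/users/:id/orders` registered, `match("/users/42/orders")` visits three nodes whether the router holds four routes or four thousand.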

Another optimization technique is route prioritization. By configuring your most frequently accessed routes with higher priority, you can ensure the routing engine evaluates them first, further reducing average routing time. Many gateways also support features like route caching and internal route handlers, which eliminate repeated parsing and matching operations for commonly used routes.

Scaling for Reliability and Performance#

As your API traffic grows, your gateway architecture needs to evolve. Let's look at strategies that help your gateway scale effectively while maintaining performance.

4. Load Balancing with Multiple Gateway Instances#

Enhance reliability and performance by deploying multiple gateway instances behind a load balancer. This approach ensures that if one instance fails, the load balancer can reroute requests to functioning instances, maintaining system availability.

Beyond reliability, this strategy delivers significant performance benefits by:

- Distributing traffic evenly across instances so that no single instance becomes a bottleneck

- Allowing for horizontal scaling as demand increases

- Enabling rolling updates without downtime

Many cloud vendors offer hosted solutions with built-in auto-scaling capabilities, eliminating the need for separate integration with other services. This automatic adjustment of resources based on traffic optimizes costs during both peak and low-traffic periods.
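
The failover behavior described above can be illustrated with a round-robin balancer that skips instances marked unhealthy. This is a simplified sketch: `RoundRobinBalancer` and the instance names are hypothetical, and a real load balancer would drive `mark_down` and `mark_up` from periodic health checks.

```python
from typing import List, Set


class RoundRobinBalancer:
    """Rotate through gateway instances, skipping any that are marked unhealthy."""

    def __init__(self, instances: List[str]) -> None:
        self.instances = list(instances)
        self.unhealthy: Set[str] = set()
        self._next = 0

    def mark_down(self, instance: str) -> None:
        self.unhealthy.add(instance)

    def mark_up(self, instance: str) -> None:
        self.unhealthy.discard(instance)

    def pick(self) -> str:
        # Try each instance at most once per call, starting from the rotation point.
        for _ in range(len(self.instances)):
            candidate = self.instances[self._next]
            self._next = (self._next + 1) % len(self.instances)
            if candidate not in self.unhealthy:
                return candidate
        raise RuntimeError("no healthy gateway instances available")
```

Marking an instance down before a deployment and back up afterward is also the basic mechanism behind rolling updates without downtime.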

5. Global API Endpoints: Bringing Your API Closer to Users#

For organizations with geographically dispersed users, deploying global API endpoints using services like AWS Global Network and Amazon CloudFront can significantly reduce latency. This strategy connects users to the closest region, minimizing response times regardless of their location.

The impact can be dramatic – reducing round-trip time from hundreds of milliseconds to tens of milliseconds by eliminating cross-continental network hops. This performance improvement is particularly noticeable for mobile users or those in regions distant from your primary data centers.

When implementing global endpoints:

- Deploy gateway instances in regions where you have significant user concentrations

- Configure robust health checks to detect and replace failing instances

- Implement consistent configuration management across distributed instances

Optimizing Gateway Components#

Breaking down your gateway into its core components helps identify targeted optimizations. This component-based approach enables you to focus your efforts where they'll have the greatest impact.

6. API Gateway Framework Optimization#

This might sound technical, but stick with me – it's worth understanding. Your API Gateway has three key components that you can tune for better performance:

- Core Business Module: This handles routing and forwarding. Making it more efficient means faster request processing.

- Filter Module: These are your security filters and load balancers. Streamline them to reduce overhead.

- Configuration Module: How your settings are managed affects performance. Optimize this for better resource usage.

By reducing dependencies between these components, you create a more flexible architecture that you can optimize piece by piece. This modular approach makes your gateway more adaptable while making performance improvements straightforward.

Here's a practical example: implementing persistent configuration can improve read-write operations, boosting I/O performance without requiring additional hardware. Think of it as tuning your car's engine instead of buying a more powerful vehicle – you get better performance without the extra cost.

7. Payload Compression: Less Is More#

Reduce payload sizes through compression techniques like GZIP or Brotli to accelerate data transmission. Smaller payloads mean faster transmission times between your gateway and clients, particularly beneficial for bandwidth-constrained environments or mobile users.

Effective compression can reduce payload sizes by 70-90% for text-based formats like JSON and XML, dramatically reducing transfer times and bandwidth costs. When implementing compression:

- Enable it selectively for text-based formats like JSON, XML, and HTML

- Consider skipping compression for already compressed formats (images, videos)

- Test performance impact with different compression levels to find the optimal balance between CPU usage and compression ratio

The performance gains from compression are particularly noticeable for clients on slower connections or when transferring larger datasets.
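
As a rough illustration of selective compression, the sketch below gzips a response only when the content type is text-based, the client advertises gzip support, and the body is large enough to be worth the CPU cost. The function name, the type list, and the 1 KB threshold are assumptions for the example; Brotli is omitted because it is not in the Python standard library.

```python
import gzip
from typing import Optional, Tuple

# Assumed defaults for the example; tune these for your own traffic.
COMPRESSIBLE_TYPES = ("application/json", "application/xml", "text/")
MIN_SIZE_BYTES = 1024


def maybe_compress(body: bytes, content_type: str, accept_encoding: str,
                   level: int = 6) -> Tuple[bytes, Optional[str]]:
    """Return (body, content_encoding); content_encoding is None if left uncompressed."""
    compressible = content_type.startswith(COMPRESSIBLE_TYPES)
    # Simplified header check: real negotiation should also honor q-values.
    client_accepts_gzip = "gzip" in accept_encoding
    if not (compressible and client_accepts_gzip) or len(body) < MIN_SIZE_BYTES:
        return body, None
    # Higher levels trade more CPU time for smaller output.
    return gzip.compress(body, compresslevel=level), "gzip"
```

Calling it with the same payload at several `level` values and timing each call is a simple way to find the balance between CPU usage and compression ratio mentioned above.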

8. Connection Pooling: Eliminate Connection Overhead#

Maintain a pool of pre-established connections to backend services to eliminate the overhead of creating new connections for each request. This technique significantly reduces latency for high-volume APIs by eliminating the time-consuming TCP handshake and TLS negotiation processes.

Connection pooling can reduce the latency of individual requests by 40-100 milliseconds in many scenarios, a substantial improvement for APIs that need to be highly responsive. For optimal implementation:

- Size your connection pools appropriately based on expected traffic patterns

- Configure connection timeouts and renewal policies to prevent stale connections

- Monitor connection usage to identify potential pool exhaustion issues

The impact of connection pooling becomes even more pronounced when working with microservices architectures where a single API request may need to communicate with multiple backend services. Without connection pooling, each of these internal service calls would incur its own connection overhead, quickly adding hundreds of milliseconds to your response times.
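
The core mechanism is small enough to sketch. The pool below is generic over whatever connection object a hypothetical `create` function returns; it is a minimal illustration that leaves out the timeout, renewal, and stale-connection handling a production pool needs.

```python
import queue
from contextlib import contextmanager
from typing import Callable, Generic, Iterator, TypeVar

T = TypeVar("T")


class ConnectionPool(Generic[T]):
    """Fixed-size pool of pre-established connections, reused across requests."""

    def __init__(self, create: Callable[[], T], size: int,
                 acquire_timeout: float = 1.0) -> None:
        self._idle: "queue.Queue[T]" = queue.Queue(maxsize=size)
        self._timeout = acquire_timeout
        for _ in range(size):
            self._idle.put(create())  # pay the connection setup cost once, up front

    @contextmanager
    def connection(self) -> Iterator[T]:
        try:
            conn = self._idle.get(timeout=self._timeout)
        except queue.Empty:
            # Pool exhaustion: every connection is in use and none was freed in time.
            raise TimeoutError("connection pool exhausted") from None
        try:
            yield conn
        finally:
            self._idle.put(conn)  # always return the connection to the pool
```

A request handler would wrap each backend call in `with pool.connection() as conn:`. Counting how often the `TimeoutError` path is hit is one simple signal that the pool is undersized for current traffic.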

Monitoring and Specialized Solutions#

Maintaining optimal performance requires visibility and specialized approaches for different architectural needs.

9. Comprehensive Monitoring: You Can't Improve What You Don't Measure#

Implement proactive monitoring to identify performance bottlenecks before they impact users. Regular analysis of metrics like response times, error rates, and resource utilization will help you continuously optimize your gateway's performance.

Effective monitoring strategies include:

- Establishing key performance indicators (KPIs) that matter most to your application

- Using API monitoring tools like New Relic, Postman, or AppDynamics to collect and analyze performance data

- Implementing log analysis with tools like ELK Stack or Graylog to identify patterns and trends

Remember that monitoring is a continuous process, not a one-time activity. Measure performance throughout the development lifecycle, from initial stages to post-deployment, to identify and address issues before they impact end-users.

10. Microgateways for Microservices: Right-Sized Solutions#

For microservices architectures, consider lightweight microgateway solutions that offer faster startup times and minimal runtime footprints. These specialized gateways enable quicker scaling in response to changing traffic patterns, which is particularly valuable in containerized or serverless environments.

Microgateways provide:

- Reduced resource consumption compared to full-featured API gateways

- Faster startup and scaling times for dynamic environments

- Tighter integration with container orchestration platforms

This approach particularly shines in environments using Kubernetes or similar orchestration systems, where rapid scaling and efficient resource usage are critical.

Security Without Sacrifice#

Many developers worry that adding security layers will inevitably slow down their API Gateway. However, with the right approach, you can implement robust security that enhances rather than hinders performance.

Centralized Authentication and Authorization#

Centralizing authentication and authorization at the gateway level allows you to implement security consistently without burdening each microservice with these concerns. Modern API gateways can handle security checks efficiently, validating authentication quickly to minimize latency.

By consolidating security at the gateway:

- Developers can focus on building core business logic instead of reimplementing security in each service

- Authentication tokens can be validated once and trusted throughout the system

- Security policies can be updated in a single location rather than across multiple services

Many gateways support centralized authentication using identity providers like Okta, Cognito, or Azure Active Directory, typically utilizing the OpenID Connect protocol based on OAuth 2.0.

For optimal security without performance degradation:

- Implement token caching to reduce authentication overhead for frequent requests

- Use lightweight JWT validation instead of full OAuth flows when appropriate

- Consider moving authorization logic to a dedicated microservice when the rules become too complex to evaluate efficiently at the gateway
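
To show how token caching and local JWT validation fit together, here is a sketch using only the standard library. It assumes HS256 tokens signed with a shared secret and an `exp` claim; many identity providers issue RS256 tokens instead, and in production a maintained JWT library is the safer choice. `verify_hs256` and `TokenCache` are hypothetical names, and the cache is unbounded for brevity.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Callable, Dict, Optional


def _b64url_decode(part: str) -> bytes:
    # JWT segments omit base64 padding, so restore it before decoding.
    return base64.urlsafe_b64decode(part + "=" * (-len(part) % 4))


def verify_hs256(token: str, secret: bytes, now: float) -> Optional[dict]:
    """Return the claims if the signature is valid and the token is unexpired."""
    try:
        header_b64, payload_b64, sig_b64 = token.split(".")
        header = json.loads(_b64url_decode(header_b64))
        if header.get("alg") != "HS256":
            return None
        signed = f"{header_b64}.{payload_b64}".encode()
        expected = hmac.new(secret, signed, hashlib.sha256).digest()
        if not hmac.compare_digest(expected, _b64url_decode(sig_b64)):
            return None
        claims = json.loads(_b64url_decode(payload_b64))
        if claims.get("exp", 0) <= now:
            return None
        return claims
    except (ValueError, TypeError, AttributeError):
        return None  # malformed token


class TokenCache:
    """Remember claims for tokens already verified, until they expire."""

    def __init__(self, secret: bytes, clock: Callable[[], float] = time.time):
        self.secret = secret
        self.clock = clock
        self._claims: Dict[str, dict] = {}
        self.verifications = 0  # counts full signature checks, for observability

    def authenticate(self, token: str) -> Optional[dict]:
        now = self.clock()
        cached = self._claims.get(token)
        if cached is not None:
            if cached["exp"] > now:
                return cached
            del self._claims[token]  # expired since it was cached
            return None
        self.verifications += 1
        claims = verify_hs256(token, self.secret, now)
        if claims is not None:
            self._claims[token] = claims
        return claims
```

Repeat requests carrying the same valid token skip the signature check entirely, while expiry is still enforced on every call.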

Balanced Security Implementation#

Defending your APIs shouldn't come at the cost of performance. Modern security approaches like zero trust architecture actually complement performance optimization by enforcing security at precisely the right points in your system, eliminating redundant checks.

Consider implementing a tiered security model where different levels of verification are applied based on the sensitivity of the operation. For example:

- Public endpoints might only need basic throttling and DDoS protection

- User-specific data endpoints require authentication but can use cached tokens

- Administrative or payment operations warrant full verification with fresh credential checks

This approach ensures you're not applying the same heavy security overhead to every request, allowing you to focus your security resources where they matter most.

Remember that security vulnerabilities themselves can lead to performance problems when exploited. Techniques like proper input validation at the gateway level not only improve security but also protect backend services from malformed requests that might cause inefficient processing or crashes.

Best Practices for Implementation#

So you're sold on these optimization strategies – great! But where do you start? Here's how to approach implementation without getting overwhelmed.

Start with a Performance Baseline#

Before you change anything, you need to know where you stand. It's like taking a "before" picture when starting a fitness program – you'll want to see your progress.

Gather concrete metrics on your current performance:

- Average and 95th percentile response times

- Requests per second throughput

- Error rates by status code

- Backend service latency versus gateway overhead

- Resource utilization (CPU, memory, network)

Tools like Prometheus, Grafana, and Datadog make collecting and visualizing these metrics straightforward. With this data in hand, you can pinpoint where you're spending most of your time and focus your optimization efforts there.
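
If you want to sanity-check what those tools report, the baseline numbers are easy to compute from raw request logs. The sketch below assumes each sample is a `(latency_ms, status_code)` pair collected over a known time window; the function names and the choice to count only 5xx responses as errors are assumptions for the example.

```python
import math
from collections import Counter
from statistics import mean
from typing import Dict, List, Tuple


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest value with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(samples: List[Tuple[float, int]], window_seconds: float) -> Dict[str, object]:
    """Summarize a non-empty list of (latency_ms, status_code) samples."""
    latencies = [ms for ms, _ in samples]
    statuses = Counter(status for _, status in samples)
    server_errors = sum(n for status, n in statuses.items() if status >= 500)
    return {
        "avg_ms": mean(latencies),
        "p95_ms": percentile(latencies, 95),
        "rps": len(samples) / window_seconds,
        "error_rate": server_errors / len(samples),
        "by_status": dict(statuses),
    }
```

Comparing `avg_ms` with `p95_ms` is often revealing: a low average with a high 95th percentile usually points to a slow minority of requests, such as cache misses or cold connections, rather than uniformly slow processing.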

Adopt an Incremental Approach#

Trying to implement all ten strategies at once is a recipe for confusion. Instead, pick the lowest-hanging fruit first and make changes one at a time. This way, you can clearly see what impact each change has.

A sensible optimization journey might look like this:

- Set up basic caching for your highest-volume, most static resources

- Add connection pooling to cut down on backend connection overhead

- Configure rate limiting to shield against unexpected traffic spikes

- Introduce payload compression for responses with lots of text data

After each change, measure the impact before moving on. Sometimes a single well-placed optimization can deliver 80% of the benefit with 20% of the effort.

Test at Scale#

Your API might work perfectly during development but fall apart under real-world load. That's why thorough load testing is non-negotiable before deploying optimizations to production.

Create test scenarios that mirror your actual traffic patterns, including:

- Sustained high load that matches your busiest periods

- What happens when caches miss, or connection pools run dry

- How your system bounces back after traffic spikes

- Resource utilization during extended operation

Tools like k6, Locust, or Apache JMeter can help create these realistic tests. And remember – the closer your test environment matches production, the more reliable your results will be.

Bringing It All Together#

API Gateway optimization isn't a one-and-done project – it's an ongoing process of measuring, improving, and measuring again. The strategies we've covered give you both quick wins and long-term structural improvements for optimizing API performance. By implementing them thoughtfully and ensuring your gateway includes essential features, you'll build an API Gateway that's more responsive, costs less to run, and scales better with your business.

Ready to transform your API Gateway performance? Zuplo offers a developer-focused interface and easy-to-deploy policies for performance optimization that can quickly bridge the gap between your current state and optimal performance. Sign up for a free Zuplo account or schedule a demo and see how much better your APIs can perform.